This blog will dive into using GPT-3 for Natural Language to SQL (NL2SQL) conversion. The article provides an overview of NL2SQL conversion and its challenges, an introduction to GPT-3 and its capabilities and limitations, and a step-by-step guide to fine-tuning GPT-3 for a custom use case. The guide includes detailed explanations along with code snippets for NL2SQL conversion. This article is designed for developers, data scientists, and anyone interested in using advanced language models for NL2SQL conversion.

Introduction:

Natural Language to SQL (NL2SQL) converts natural language questions into structured SQL queries for database systems, making extracting information easier for non-technical users.

On the other hand, GPT-3 is a large language model by OpenAI that generates human-like text and can be used for a wide range of applications, including text summarization and question answering.

Background:

Natural Language to SQL (NL2SQL) conversion has several existing solutions, such as AI2SQL, OpenAI Codex, Google's Cloud Natural Language API, and SQLizer. Each solution offers different features and supports various SQL commands and databases. The right solution will depend on the user's specific requirements and use case.

AI2SQL is a framework for converting natural language into SQL, but its open-source design may limit its ability to process complex SQL commands and databases that are not widely used. The OpenAI Codex has limitations in its ability to be customized, which might not work well for complicated and unique situations. Google's Cloud Natural Language API may not be precise enough or provide complete answers to natural language queries. SQLizer can only convert data from CSV or Excel spreadsheets into SQL and is unsuitable for more sophisticated NL2SQL conversion tasks.

Developing a custom solution using GPT-3 might be necessary because existing solutions may not provide the level of accuracy and customization that you require. Some existing solutions may have limited support for SQL commands and databases, and may not be suitable for advanced NL2SQL conversion tasks.

With GPT-3, you have more control over the accuracy and customization of the NL2SQL conversion process, and the language understanding capabilities of the model allow you to support SQL commands and databases. The advantage of GPT-3 is that you can use its language understanding capabilities to meet specific requirements that cannot be met by relying on existing solutions.

Natural Language to SQL using GPT-3 prompt:

GPT-3 prompt available on the OpenAI playground can be used to convert the natural language to SQL. People uncomfortable with SQL can use plain English to retrieve data from a database.

However, it is essential to note that the SQL query generated will be generic and may not precisely fit the desired use case due to a lack of detailed information. Additionally, the accuracy of the generated SQL query may not be high.

GPT-3 Parameters:

To improve accuracy to a specific limit, GPT-3 parameters can be adjusted in Playground for Natural Language to SQL conversion, including model, temperature, max tokens, frequency penalty, presence penalty, and top p.

Model refers to the name of the model. Temperature(usually 0.7 - 0.9) denotes the randomness of the response. Higher temperatures can boost creativity in generated responses, making it better for generation tasks. A lower temperature is better for prediction tasks like sarcasm detection, as it produces more consistent results.

The max tokens parameter limits how many words the response can have. The frequency penalty controls how often certain words or phrases are repeated. The presence penalty tries to avoid using words that don't fit the question's context. The top p parameter controls how different the responses can be.

Pros:

The advantage of GPT-3 for Natural Language to SQL conversion is that it can save time and effort by automating the translation process. Non-technical users who may not be proficient in SQL can use GPT-3 to query a database. However, the generated query's accuracy depends on the quality of the input prompt and GPT-3 parameters used.

OpenAI Playground:

Let's see an example of how GPT-3 can be used to translate natural language statements into SQL statements for the "customers," "orders", and "products" tables in our database.

Suppose we have the following schema for our tables:

CREATE TABLE `customers` (

`id` INT NOT NULL AUTO_INCREMENT,

`name` VARCHAR(255) NOT NULL,

`email` VARCHAR(255) NOT NULL,

`phone` VARCHAR(20) NOT NULL,

`address` TEXT NOT NULL,

PRIMARY KEY (`id`)

);

CREATE TABLE `orders` (

`order_id` INT NOT NULL AUTO_INCREMENT,

`customer_id` INT NOT NULL,

`order_date` DATE NOT NULL,

`total_amount` DECIMAL(10,2) NOT NULL,

PRIMARY KEY (`order_id`),

FOREIGN KEY (`customer_id`) REFERENCES `customers` (`id`)

);

CREATE TABLE `products` (

`product_id` INT NOT NULL AUTO_INCREMENT,

`name` VARCHAR(255) NOT NULL,

`category` VARCHAR(255) NOT NULL,

`price` DECIMAL(10,2) NOT NULL,

`description` TEXT NOT NULL,

PRIMARY KEY (`product_id`)

);

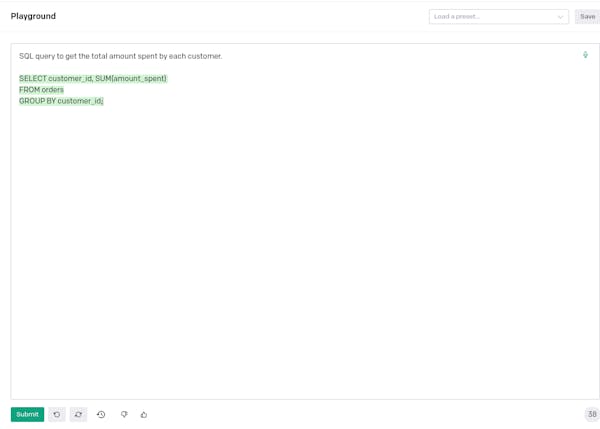

As we can see, the response generated by GPT-3 is not specific to our database schema.

SQL query to get the total amount spent by each customer.

SELECT customer_id, SUM(amount_spent)

FROM orders

GROUP BY customer_id;

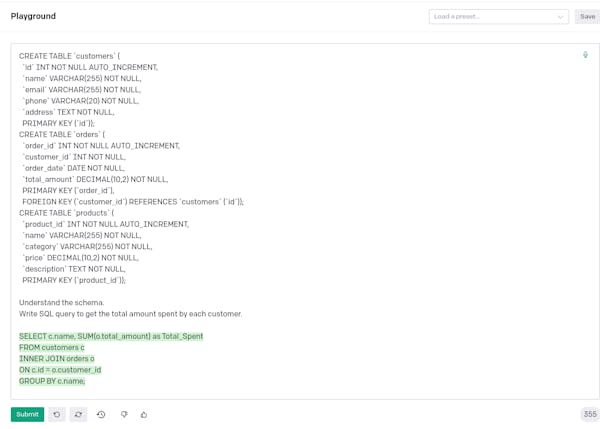

Now, let's provide more specific information to GPT-3 by including our database schema.

SQL query to get the total amount spent by each customer.

SELECT c.name, SUM(o.total_amount) AS Total_Spent

FROM Customers c

INNER JOIN Orders o ON c.id = o.customer_id

GROUP BY c.name;

The response becomes more accurate when provided with the schema. However, providing the schema every time a SQL query is needed is not feasible.

Therefore, fine-tuning GPT with custom data is a solution to this problem. The process of fine-tuning GPT-3 for SQL query generation will be discussed below.

Fine-tuning GPT-3 for Custom Natural Language to SQL:

Finetuning is when a pre-trained large language model like GPT-3 is further trained on task-specific data to adapt it to a specific task or domain. The objective of finetuning is to improve the model's performance by providing more relevant examples and information specific to the task at hand.

Let's say you want to use GPT-3 to translate natural language into SQL. But you realize that GPT-3 doesn't understand the structure of your specific database. One way to help GPT-3 is to tell it about your database(like the above prompt-based), but it still might not work perfectly.

The best way to ensure it works well is to fine-tune it using your data. This will ensure that GPT-3 understands precisely what you need it to do.

Steps involved in fine-tuning GPT-3 for Natural Language to SQL:

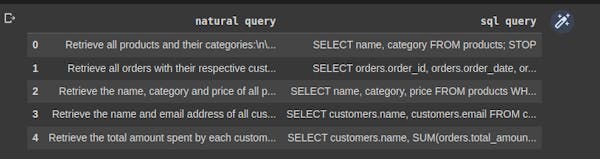

Prepare a dataset of prompts and corresponding SQL queries and upload it to your working directory. It is recommended to have at least a few hundred training examples for consistent and accurate responses.

Import necessary modules for data preprocessing and fine-tuning, such as pandas, OpenAI, etc.

pip install --upgrade openaiimport pandas as pd import numpy as npLoad the dataset and perform any necessary preprocessing steps, such as removing white spaces or other formatting issues.

data = pd.read_csv("/content/prompt and intent.csv", header=None) data['natural query'] = data['natural query'].str.strip() data['sql query'] = data['sql query'].str.strip()Establish rules for formatting the prompts and SQL query completions, such as using a simple separator like

\\n\\n###\\n\\nin the prompts and starting the completions with white space and an end symbol like###or aSTOPkeyword.data['natural query'] = data['natural query'] + "\n\n###\n\n" data['sql query'] = " " + data['sql query'] + " STOP"

Use pandas to convert the training data into JSON Lines format, compatible with GPT-3 fine-tuning.

data.to_json("p_and_c.jsonl",orient='records',lines=True)Install the OpenAI API and use the CLI to validate the training data and obtain suggestions.

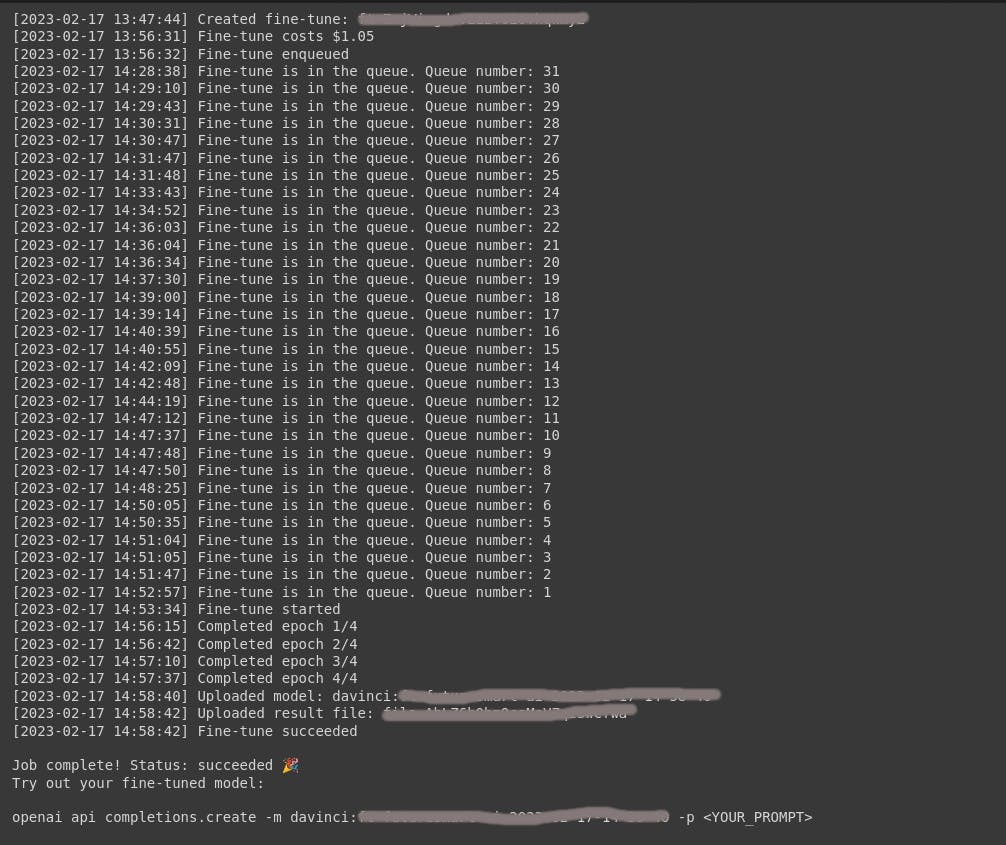

import os os.environ['OPENAI_API_KEY'] = ''openai tools fine_tunes.prepare_data -f p_and_c.jsonlTrain a new fine-tuned GPT-3 model using the OpenAI API, using your API key and the CLI to create and monitor the training process.

openai api fine_tunes.create -t "p_and_c.jsonl" -m 'davinci'!openai api fine_tunes.follow -i "fine-tuned model unique identifier"

Once training is complete, format your prompts for inference as you formatted them for training.

prompt = "Retrieve the name and total amount of the customer who placed the order with the highest total amount\n\n###\n\n"Use the fine-tuned GPT-3 model for natural language to SQL inference, customizing further as needed by modifying the model's parameters.

import openai openai.api_key = os.getenv("OPENAI_API_KEY") response = openai.Completion.create( model="model name", prompt=prompt, temperature=0.7, max_tokens=256, top_p=1, frequency_penalty=0, presence_penalty=0, stop= [" STOP"] )print(response['choices'][0]['text']) SELECT customers.name, MAX(orders.total_amount) AS total_amount FROM customers JOIN orders ON customers.id = orders.customer_id GROUP BY customers.name ORDER BY total_amount DESC LIMIT 1;

For instructions on Fine-Tuning GPT-3, you can refer to this video.

Also, AIDemos.com is an incredible resource for anyone looking to explore the potential of AI.