Natural Language Processing (NLP) is a field of artificial intelligence that focuses on enabling computers to understand and process human language. One of the key techniques used in NLP is finetuning, which involves adapting a pre-trained language model to a specific task by further training it on task-specific data. Finetuning is important in NLP because it allows for more accurate and efficient natural language processing.

Hugging Face Transformers is a popular library for NLP that provides pre-trained language models based on the transformer architecture, which has revolutionized NLP in recent years by enabling the development of models that can process entire sentences and paragraphs at once. In this blog post, we will guide finetuning Hugging Face Transformers using BERT, one of the most widely used pre-trained language models.

We'll cover the importance of finetuning, the popularity of Hugging Face Transformers, understanding BERT, dataset and preprocessing, fine-tuning BERT, and evaluating the fine-tuned model. By the end of this post, you'll know how to finetune Hugging Face Transformers using BERT for NLP tasks. This guide is intended to be comprehensive and provide a solid understanding of finetuning Hugging Face Transformers using BERT.

BERT and its significance in NLP

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a pre-trained language model developed by Google in 2018. It is a deep neural network architecture based on transformer architecture, capable of processing and understanding natural language text.

What makes BERT significant in NLP is its ability to understand the context and meaning of words within a sentence, or even an entire document, rather than simply treating each word as a standalone entity. This is due to its bidirectional nature, where the model is trained to consider both the preceding and following words in a sentence when making predictions.

BERT Architecture and pre-training

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model developed by Google in 2018. It is a type of Transformer-based neural network architecture that uses an attention mechanism to process sequential input data. The architecture of BERT consists of a series of transformer blocks where each block contains a multi-head attention layer and a feed-forward neural network.

The model uses a bidirectional approach, which means that it can learn the context of a word by looking at both the left and right context of the word. This helps the model to better understand the meaning of the words and the relationships between them.

BERT is pre-trained on a large corpus of text using two unsupervised learning tasks. The first task is called Masked Language Modeling (MLM), where the model is trained to predict a randomly masked word in a sentence given the surrounding context. This task helps the model learn the contextual relationship between words in a sentence.

The second task is called Next Sentence Prediction (NSP), where the model is trained to predict whether two given sentences are consecutive or not. This task helps the model learn the relationships between sentences in a document.

The pre-training process is carried out on massive amounts of text data, which enables the model to learn general language understanding. Once the model is pre-trained, it can be fine-tuned on specific NLP tasks with smaller amounts of task-specific data. The fine-tuning process involves adding a classification layer on top of the pre-trained model and training the entire model on the task-specific dataset. This fine-tuning approach allows the model to learn the nuances of the specific task and achieve state-of-the-art performance on various NLP tasks.

Demystifying Model Fine-tuning for Improving Sentiment Analysis Performance:

Hugging Face provides an easy-to-use library called Transformers that make it simple to fine-tune pre-trained transformer models on a variety of natural language processing tasks. The library includes many pre-implemented scripts that can be used to fine-tune models on common tasks such as sentiment analysis, text classification, and question answering.

Fine-tuning a pre-trained transformer model from Hugging Face involves taking a pre-trained model and adapting it to a specific downstream task. This is done by training the pre-trained model on a task-specific dataset.

Here we explain the code for fine-tuning the model for the sentiment analysis task:

Dataset Link: kaggle.com/competitions/jigsaw-toxic-commen..

- Install the transformers library using

!pip install transformers -Uand import the required libraries:

!pip install transformers -U

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, recall_score, precision_score, f1_score

import torch

from transformers import TrainingArguments, Trainer

from transformers import BertTokenizer, BertForSequenceClassification

- Load the dataset containing toxic comments using

pd.read_csvand select only the relevant columns:

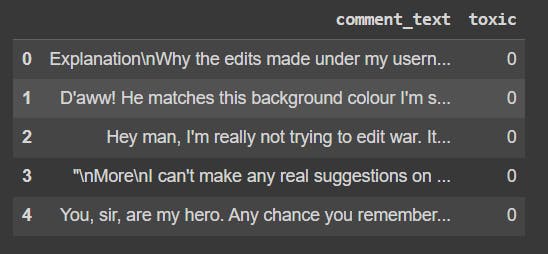

data = pd.read_csv("file_location",error_bad_lines=False, engine="python")

data = data[['comment_text','toxic']]

data = data[0:1000]

Output:

- Split the dataset into training and validation sets using

train_test_splitfrom sklearn. 80% of the data is used for training and 20% for validation:

X = list(data["comment_text"])

y = list(data["toxic"])

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2,stratify=y)

- Tokenize the input sequences using the

BertTokenizerfrom the transformers library. Thepadding=True,truncation=True, andmax_length=512parameters ensure that all input sequences have the same length.

from transformers import BertTokenizer, BertForSequenceClassification

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

X_train_tokenized = tokenizer(X_train, padding=True, truncation=True, max_length=512)

X_val_tokenized = tokenizer(X_val, padding=True, truncation=True, max_length=512)

- Create a custom PyTorch dataset using the tokenized input sequences and corresponding labels:

class Dataset(torch.utils.data.Dataset):

def __init__(self, encodings, labels=None):

self.encodings = encodings

self.labels = labels

def __getitem__(self, idx):

item = {key: torch.tensor(val[idx]) for key, val in self.encodings.items()}

if self.labels:

item["labels"] = torch.tensor(self.labels[idx])

return item

def __len__(self):

return len(self.encodings["input_ids"])

train_dataset = Dataset(X_train_tokenized, y_train)

val_dataset = Dataset(X_val_tokenized, y_val)

In this snippet, we define a custom dataset class Dataset that takes the tokenized encodings and labels as input. The __getitem__ method returns a dictionary of tensors representing each input sample. We create instances of train_dataset and val_dataset using the tokenized datasets and labels.

- Define a function to compute evaluation metrics (accuracy, recall, precision, and F1-score) for the model:

def compute_metrics(p):

pred, labels = p

pred = np.argmax(pred, axis=1)

accuracy = accuracy_score(y_true=labels, y_pred=pred)

recall = recall_score(y_true=labels, y_pred=pred)

precision = precision_score(y_true=labels, y_pred=pred)

f1 = f1_score(y_true=labels, y_pred=pred)

return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

- Define the training arguments for the Trainer using

TrainingArguments:

args = TrainingArguments(

output_dir="output",

num_train_epochs=1,

per_device_train_batch_size=8

)

- Define the Trainer using the

Trainerclass from the transformers library, passing in the model, training, and validation datasets, and the evaluation metrics function. Then, we train the model usingtrainer.train():

model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

model = model.to('cuda')

trainer = Trainer(

model=model,

args=args,

train_dataset=train_dataset,

eval_dataset=val_dataset,

compute_metrics=compute_metrics

)

trainer.train()

Evaluate the model and make predictions on new inputs:

Here, we define the training arguments and initialize the

Trainerwith the BERT model, training arguments, and the training and validation datasets. We train the model usingtrainer.train()and evaluate its performance on the validation set usingtrainer.evaluate():

trainer.evaluate()

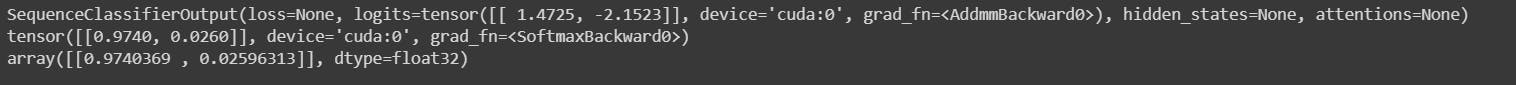

text = "That was good point"

inputs = tokenizer(text, padding=True, truncation=True, return_tensors='pt').to('cuda')

outputs = model(**inputs)

predictions = torch.nn.functional.softmax(outputs.logits, dim=-1)

predictions = predictions.cpu().detach().numpy()

The output has a 0.97 score for a positive label indicating that the text was a positive comment.

We save the fine-tuned model using trainer.save_model() to a directory called "CustomModel". Later, we load the saved model using BertForSequenceClassification.from_pretrained() and move it to the GPU**:**

trainer.save_model('CustomModel')

We can load the saved model and make predictions on new inputs.

Finally, we provide an example text and tokenize it. We pass the tokenized inputs through the loaded model (

model_2) to obtain the predicted probabilities using the softmax function. The predictions are converted to a numpy array for further processing**:**

model_2 = BertForSequenceClassification.from_pretrained("CustomModel")

model_2.to('cuda')

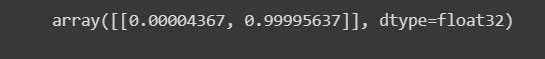

text = "go to hell"

inputs = tokenizer(text, padding=True, truncation=True, return_tensors='pt').to('cuda')

outputs = model_2(**inputs)

predictions = torch.nn.functional.softmax(outputs.logits, dim=-1)

predictions = predictions.cpu().detach().numpy()

Output:

Here we have a 0.999 score for the negative label, indicating that the text represents a negative comment.

This code demonstrates how to fine-tune a pre-trained BERT model on a custom dataset using the Hugging Face Transformers library. The model is trained to classify toxic comments, and evaluation metrics such as accuracy, recall, precision, and F1-score are computed to assess the model's performance. Finally, the trained model is saved and can be loaded later to make predictions on new inputs.

Conclusion

In this blog post, we have explored the process of fine-tuning Hugging Face Transformers for NLP tasks using the BERT model. We started by discussing the importance of fine-tuning and how BERT is pre-trained on a large corpus of text to learn general language understanding.

Next, we loaded a dataset, pre-processed it, and split it into training and validation sets. We then tokenized the input sequences using the BERT tokenizer and created a PyTorch dataset. We trained a BERT model on this dataset and evaluated its performance on the validation set.

Finally, we saved the fine-tuned model and tested it on some sample input sequences to see how well it performs on new data.

In conclusion, fine-tuning pre-trained models like BERT using Hugging Face Transformers is a powerful technique for solving a wide range of NLP problems. With the availability of pre-trained models and libraries like Transformers, it has become easier and more efficient to implement state-of-the-art NLP models. The success of these techniques has led to significant advancements in various NLP applications such as sentiment analysis, text classification, and language translation.

To follow the video tutorial visit:

Follow FutureSmart AI to stay up-to-date with the latest and most fascinating AI-related blogs - FutureSmart AI

Looking to catch up on the latest AI tools and applications? Look no further than AI Demos This directory features a wide range of video demonstrations showcasing the latest and most innovative AI technologies. Whether you're an AI enthusiast, researcher, or simply curious about the possibilities of this exciting field, AI Demos is your go-to resource for education and inspiration. Explore the future of AI today with AI Demos