Introduction to Web Scraping for Data Science

What is Web Scraping

Web scraping is the automated extraction of data from websites using specialized software or programming tools. It involves fetching a web page's HTML code and extracting information from it, such as text, images, and other media.

Web scraping can be used for a variety of purposes, including market research, data analysis, and content creation. It allows users to gather large amounts of data from multiple sources quickly and efficiently, which can then be used to inform business decisions, gain insights into customer behavior, and more.

Role of Web Scraping in the field of Data Science

Data scientists often need to collect large amounts of data for their projects, and web scraping offers a way to do this quickly and efficiently. It can be used to collect data from various sources, such as social media, news sites, and e-commerce websites, and this data can then be analyzed to gain insights into customer behavior, market trends, and other relevant information. The main advantages of web scraping for data science are:

Large amounts of data can be collected quickly and efficiently.

Data can be collected from various sources, including those that may not offer a direct data export feature.

Scrapers can be scheduled to run repeatedly, enabling near real-time data collection, which is useful for monitoring changes in trends or behavior.

Web scraping can be automated, reducing the need for manual data collection.

Introduction to Web Scraping using Selenium

What is Selenium

Selenium is an open-source browser automation framework that was originally developed for testing web applications. Because it drives a real browser, it is also a practical tool for web scraping. Selenium supports multiple programming languages, including Python, Java, C#, and Ruby, making it accessible to developers with different backgrounds and skill sets.

Benefits of using Selenium as a Web Scraper

Flexibility - Selenium allows developers to scrape data from a wide range of websites, including those with complex or dynamic content.

Speed - Selenium can automate repetitive tasks and perform them much faster than a person collecting the data by hand.

Accuracy - Because every step is scripted, data is extracted the same way on every run, reducing the errors and inconsistencies of manual copying.

Scalability - Selenium can scrape multiple pages or websites at the same time by running several browser instances in parallel (for example, with Selenium Grid), making it a viable option for larger data extraction jobs.

When to use Selenium over other Scraping Tools

Imagine you need to scrape data from a website that relies heavily on JavaScript to generate its content, and you're wondering which tool to use. You might be tempted to use a scraper that can only extract static HTML, but that content is created by JavaScript running in the browser after the page loads, so a static scraper never sees it. It is like trying to get a message out of a locked safe without the combination!

This is where we can leverage the power of Selenium. Selenium allows us to automate interactions with the website as if we were using a browser ourselves. With Selenium, we can click buttons, fill out forms, and navigate between pages, just like a human user would. This is especially useful if we need to scrape data from a website that requires authentication or has complex interactions.
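To see why a static-HTML scraper falls short, consider a simplified, made-up page whose hotel list is filled in by JavaScript after the page loads. The sketch below parses the raw HTML with Python's built-in html.parser, the way a static scraper would, and finds the list empty because the script that adds the items is never executed. The markup and the HotelNameParser class are hypothetical, for illustration only.

```python
from html.parser import HTMLParser

# Hypothetical raw HTML, as a plain HTTP client would receive it. The <ul> is
# empty; the hotel names are only inserted once a browser runs the <script>.
RAW_HTML = """
<html>
  <body>
    <ul id="hotels"></ul>
    <script>
      const names = ["Hotel A", "Hotel B"];
      const list = document.getElementById("hotels");
      names.forEach(n => {
        const li = document.createElement("li");
        li.textContent = n;
        list.appendChild(li);
      });
    </script>
  </body>
</html>
"""


class HotelNameParser(HTMLParser):
    """Collects the text of <li> elements, as a static scraper would."""

    def __init__(self):
        super().__init__()
        self.in_li = False
        self.names = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.in_li = True

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_li = False

    def handle_data(self, data):
        # Script contents are passed here as plain data, never parsed as tags
        if self.in_li and data.strip():
            self.names.append(data.strip())


parser = HotelNameParser()
parser.feed(RAW_HTML)
print(parser.names)  # []  (the JavaScript never ran, so there are no <li> items)
```

Selenium, by contrast, loads the page in a real browser, so the script runs and the list items exist by the time we query the page.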

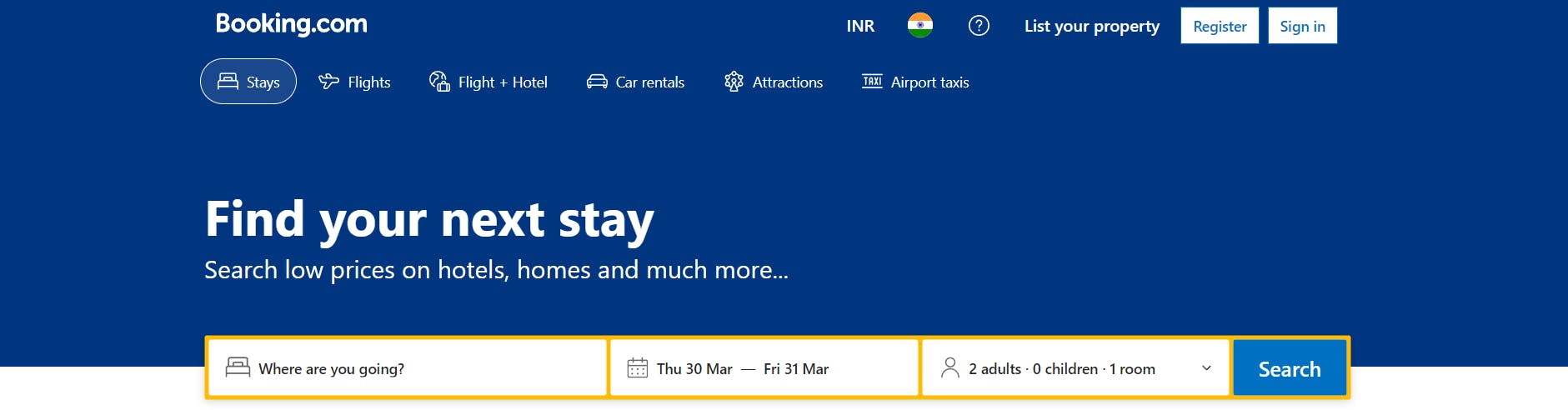

Scraping Booking.com using Selenium

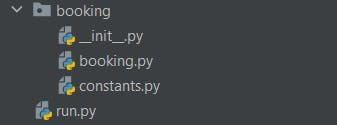

Understanding the file structure of our project

booking.py contains all the methods that automate extracting data from the web page.

constants.py contains the constants the bot needs, including sensitive information such as your email ID and password.

__init__.py marks the booking folder as a Python package, so that its modules can be imported.

run.py triggers the methods defined in booking.py.

If you want to follow along with this tutorial, clone the GitHub repo: https://github.com/devSubho51347/booking.com-scraper/tree/master

After cloning the repo, open a terminal in the cloned folder and run pip install -r requirements.txt to install all the dependencies needed to run the bot.

Defining the constants inside the constants.py file

```python
# We can make use of this file by storing constants

# The URL you want to scrape
url = "https://www.booking.com"

# Your email ID for authentication
email = "enter email id"

# Your password
password = "enter_your_password"
```

So we have defined the URL, email id, and password inside the constants.py file. We will be importing these variables into our booking file. We are storing all the constants in a single file so that if we need to make any changes we can just update these values in a single place.
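Hard-coding a real password in constants.py risks committing it to version control. One common alternative, sketched below under our own assumptions, is to keep constants.py but read the credentials from environment variables. The variable names BOOKING_EMAIL and BOOKING_PASSWORD and the load_credentials helper are hypothetical; choose your own.

```python
import os

# The target URL is not secret, so it can stay hard-coded
url = "https://www.booking.com"


def load_credentials():
    """Read the login credentials from environment variables.

    BOOKING_EMAIL and BOOKING_PASSWORD are hypothetical names chosen for this
    sketch; an empty string is returned for any variable that is not set.
    """
    return (
        os.environ.get("BOOKING_EMAIL", ""),
        os.environ.get("BOOKING_PASSWORD", ""),
    )


# Same module-level names as before, so "from .constants import url, email, password" still works
email, password = load_credentials()
```

Before running the bot, set the variables in your shell, for example with export BOOKING_EMAIL="you@example.com" on Linux or macOS.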

Implementing the scraper bot methods inside the booking.py file

Now let us dive deeper into our scraping bot. All of its methods are defined in the Booking class inside booking.py.

Importing the required libraries

```python
from time import sleep

import pandas as pd
from chromedriver_py import binary_path
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys

from .constants import url, email, password
```

webdriver is the Selenium module that provides the browser driver classes, such as webdriver.Chrome, used to automate interactions with a web browser. By defines the strategies for locating elements on a web page, such as by ID, class name, CSS selector, or XPath. Keys represents keyboard keys and is used to simulate key presses. Service tells Selenium which ChromeDriver executable to launch. The pandas library is used for basic data manipulation, and chromedriver_py provides a ChromeDriver binary for automated testing of web apps and exposes its location as binary_path. Finally, the url, email, and password constants are imported from constants.py.

Next, we create a class that inherits from webdriver.Chrome. This way, a Booking object has all the standard WebDriver methods, such as get(), find_element(), and quit(), and we can add our own custom methods alongside them.

```python
class Booking(webdriver.Chrome):

    def __init__(self, executable_path=binary_path, teardown=False):
        self.executable_path = executable_path
        self.teardown = teardown
        # Point Selenium at the ChromeDriver binary, then start the browser by
        # calling the webdriver.Chrome constructor via super()
        service = Service(executable_path=self.executable_path)
        super().__init__(service=service)
        # Retry element lookups for up to 15 seconds before raising NoSuchElementException
        self.implicitly_wait(15)
        # Maximize the window
        self.maximize_window()
        # Scraped hotel names are collected here
        self.dict = {"Hotel": []}

    # Called when the "with" block in run.py ends; quits the browser only if teardown=True
    def __exit__(self, exc_type, exc_val, exc_tb):
        if self.teardown:
            self.quit()

    def get_first_page(self):
        self.get(url)

    def select_place_to_go(self):
        # Raw string so the CSS escapes (\:) reach the selector unchanged
        search_field = self.find_elements(By.CSS_SELECTOR, r"#\:Ra9\:")[0]
        print("Enter the name of the place:")
        place = input()
        search_field.send_keys(place, Keys.ENTER)

    def get_hotels_info(self):
        # Scroll to the top, then page down with the Space bar
        self.find_element(By.TAG_NAME, "body").send_keys(Keys.CONTROL + Keys.HOME)
        scroll = 12
        sleep(2)
        for _ in range(scroll):
            self.find_element(By.TAG_NAME, "html").send_keys(Keys.SPACE)
            sleep(1)

        # Extract the hotel names (site-generated class names that may change)
        hotel_names = self.find_elements(By.CSS_SELECTOR, ".fcab3ed991.a23c043802")
        print(len(hotel_names))
        for ele in hotel_names:
            self.dict["Hotel"].append(ele.text)
        print(self.dict)
        print(len(self.dict["Hotel"]))

        # Click the button inside the pagination element to go to the next page
        nxt_button = self.find_element(By.CSS_SELECTOR, ".f32a99c8d1.f78c3700d2")
        print(nxt_button)
        nxt_button = nxt_button.find_element(By.TAG_NAME, "button")
        nxt_button.click()

    def create_dataframe(self):
        df = pd.DataFrame(self.dict)
        df.to_csv("mumbai_hotels1.csv")
```

Now let us understand the above code in detail, beginning with the __init__ method. This is the constructor of our class, and it is called as soon as we create an instance of Booking. The executable_path parameter holds the path to the ChromeDriver binary (by default, binary_path from chromedriver_py). super() calls the constructor of the parent webdriver.Chrome class, which launches the browser. The implicit wait tells the driver to keep retrying element lookups for up to the given time (here 15 seconds) before it raises a NoSuchElementException. Finally, self.dict = {"Hotel": []} stores the scraped data; in this tutorial, that is the names of the hotels at the destination the user provides.
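Conceptually, an implicit wait turns every element lookup into a retry loop: keep trying until the element is found or the timeout expires. The stand-alone sketch below illustrates that idea in plain Python. It is not Selenium's implementation (with an implicit wait, the retrying is done by the browser driver itself), and find_with_timeout, ElementNotFound, and the interval parameter are hypothetical names.

```python
import time


class ElementNotFound(Exception):
    """Stand-in for Selenium's NoSuchElementException in this sketch."""


def find_with_timeout(lookup, timeout=15.0, interval=0.5):
    """Call lookup() until it returns a result or timeout seconds have passed.

    lookup should raise ElementNotFound while the element is not present yet.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            return lookup()
        except ElementNotFound:
            if time.monotonic() >= deadline:
                raise  # give up, as Selenium does once the implicit wait expires
            time.sleep(interval)


# Simulated element that only "appears" on the third lookup
attempts = []


def search_box_lookup():
    attempts.append(1)
    if len(attempts) < 3:
        raise ElementNotFound("not rendered yet")
    return "search box"


print(find_with_timeout(search_box_lookup, timeout=2, interval=0.01))  # search box
```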

```python
def get_first_page(self):
    self.get(url)
```

get_first_page calls WebDriver's get() method, which loads the URL defined in constants.py in the Chrome browser.

```python
def select_place_to_go(self):
    # Raw string so the CSS escapes (\:) reach the selector unchanged
    search_field = self.find_elements(By.CSS_SELECTOR, r"#\:Ra9\:")[0]
    print("Enter the name of the place:")
    place = input()
    search_field.send_keys(place, Keys.ENTER)
```

The select_place_to_go method lets the user specify the destination. We can achieve this in three steps.

Locate the search field with Selenium's find_elements method. We identify the element by its CSS selector, and since find_elements returns a list of elements, we select the first one by index. Auto-generated IDs such as :Ra9: can change when the site is updated, so you may need to inspect the page and update the selector.

Read the destination from the user with input().

Send the user input to the search field with the send_keys method. We pass two arguments: the place where the user wants to go and Keys.ENTER, which simulates pressing the Enter key to submit the search.
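The backslashes in the selector "#\:Ra9\:" are CSS escapes: the element's id is :Ra9:, and since a colon normally starts a pseudo-class in CSS, it has to be escaped to be matched literally. The helper below is a simplified, hypothetical sketch that builds such an ID selector; unlike the browser's CSS.escape() it only backslash-escapes characters that are not letters, digits, hyphens, or underscores, and it does not handle every edge case (for example, IDs that start with a digit).

```python
def css_id_selector(element_id):
    """Build a CSS ID selector, backslash-escaping every character that is not
    a letter, digit, hyphen or underscore (simplified escaping)."""
    escaped = "".join(
        ch if ch.isalnum() or ch in "-_" else "\\" + ch
        for ch in element_id
    )
    return "#" + escaped


# Produces the same selector string as r"#\:Ra9\:" in select_place_to_go
print(css_id_selector(":Ra9:"))  # #\:Ra9\:
```

In Python source, write such selectors as raw strings (r"...") so the backslashes are kept literally instead of being read as string escapes.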

```python
def get_hotels_info(self):
    # Scroll to the top, then page down with the Space bar
    self.find_element(By.TAG_NAME, "body").send_keys(Keys.CONTROL + Keys.HOME)
    scroll = 12
    sleep(2)
    for _ in range(scroll):
        self.find_element(By.TAG_NAME, "html").send_keys(Keys.SPACE)
        sleep(1)

    # Extract the hotel names
    hotel_names = self.find_elements(By.CSS_SELECTOR, ".fcab3ed991.a23c043802")
    print(len(hotel_names))
    for ele in hotel_names:
        self.dict["Hotel"].append(ele.text)
    print(self.dict)
    print(len(self.dict["Hotel"]))

    # Click the button inside the pagination element to go to the next page
    nxt_button = self.find_element(By.CSS_SELECTOR, ".f32a99c8d1.f78c3700d2")
    print(nxt_button)
    nxt_button = nxt_button.find_element(By.TAG_NAME, "button")
    nxt_button.click()
```

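get_hotels_info first scrolls to the top of the page (Ctrl+Home) and then presses the Space bar 12 times, pausing one second each time, so that results loaded on scroll get rendered. It then collects the hotel names by their CSS classes, appends them to self.dict["Hotel"], and finally clicks the button inside the pagination element to move on to the next page. Class names such as fcab3ed991 are generated by the site and can change, so re-check them in your browser's developer tools if the method stops finding elements.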
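get_hotels_info assumes that a next-page button exists; on the last page of results, find_element would raise NoSuchElementException after the implicit wait. One way to structure the loop is to separate extraction from navigation and stop when there is no next page. The sketch below shows that control flow in plain Python with stand-in callables; scrape_pages, extract_names, and go_to_next_page are hypothetical names, and in the real bot they would wrap the Selenium calls in get_hotels_info (go_to_next_page could return False when the button lookup raises NoSuchElementException).

```python
def scrape_pages(extract_names, go_to_next_page, max_pages):
    """Collect names from up to max_pages pages.

    extract_names() returns the names on the current page.
    go_to_next_page() returns False when there is no next page.
    """
    collected = []
    for _ in range(max_pages):
        collected.extend(extract_names())
        if not go_to_next_page():
            break  # last page reached: stop instead of raising
    return collected


# Fake two-page result set standing in for the browser
pages = [["Hotel A", "Hotel B"], ["Hotel C"]]
current = {"page": 0}


def extract_names():
    return pages[current["page"]]


def go_to_next_page():
    if current["page"] + 1 >= len(pages):
        return False
    current["page"] += 1
    return True


print(scrape_pages(extract_names, go_to_next_page, max_pages=5))
# ['Hotel A', 'Hotel B', 'Hotel C']
```

Even though max_pages is 5, the loop stops after the second page because there is nowhere further to go.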

```python
def create_dataframe(self):
    df = pd.DataFrame(self.dict)
    df.to_csv("mumbai_hotels1.csv")
```

Finally, once all the data has been scraped, we want to analyze it, so we need to store it in tabular form. For that purpose, we convert the dictionary into a pandas DataFrame with pd.DataFrame (the "Hotel" key becomes a column) and then write the resulting DataFrame to a CSV file with to_csv. By default, to_csv also writes the DataFrame index as the first column; pass index=False to omit it.
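If you would rather not depend on pandas for this last step, the standard-library csv module can write the same dictionary of lists. The sketch below is an alternative, not what the repo does; write_hotels_csv is a hypothetical name, and like to_csv(index=False) it writes no index column.

```python
import csv
import io


def write_hotels_csv(data, file_obj):
    """Write a dict of equal-length lists (e.g. {"Hotel": [...]}) as CSV rows."""
    writer = csv.writer(file_obj)
    columns = list(data)
    writer.writerow(columns)  # header row: the dictionary keys
    # One row per hotel; zip stops at the shortest list, whereas
    # pd.DataFrame would raise an error for lists of unequal length
    writer.writerows(zip(*(data[c] for c in columns)))


buffer = io.StringIO()
write_hotels_csv({"Hotel": ["Hotel A", "Hotel B"]}, buffer)
print(buffer.getvalue().splitlines())  # ['Hotel', 'Hotel A', 'Hotel B']
```

To write a real file, open it with open("mumbai_hotels1.csv", "w", newline="") as the csv module documentation recommends, and pass that file object instead of the StringIO buffer.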

Executing the bot using the run.py file

This file is used to invoke the methods of the booking.py file

```python
from booking.booking import Booking

with Booking(teardown=False) as bot:
    bot.get_first_page()
    print("Loaded the home page")
    # bot.sign_in()
    bot.select_place_to_go()

    # Number of result pages to scrape
    no_of_pages_to_scrape = 2
    for _ in range(no_of_pages_to_scrape):
        bot.get_hotels_info()

    bot.create_dataframe()
```

In the first line, we import the Booking class that we defined in booking.py. Next, we use a context manager (the with statement): it creates bot as an instance of the Booking class, and when execution leaves the indented block, Python automatically calls the __exit__() magic method. Our __exit__() quits the browser only when teardown is True; run.py passes teardown=False, so the browser is not closed explicitly at the end of the block. Set teardown=True if you want the browser to close automatically once scraping is done. Finally, the no_of_pages_to_scrape variable sets how many result pages to scrape: get_hotels_info() is called once per page, and create_dataframe() then saves the results to a CSV file.
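The with statement works because of Python's context manager protocol: __enter__ runs when the block starts and its return value is bound to the name after as, and __exit__ runs when the block ends, even if an exception is raised inside it. Booking does not define __enter__ itself; it inherits one from Selenium's WebDriver base class that returns the driver. The sketch below uses a hypothetical FakeBrowser stand-in, rather than a real browser, to show how the teardown flag decides whether quit() is called.

```python
class FakeBrowser:
    """Stand-in for the Booking class that records calls instead of driving Chrome."""

    def __init__(self, teardown=False):
        self.teardown = teardown
        self.closed = False

    def __enter__(self):
        return self  # this is what "as bot" receives

    def __exit__(self, exc_type, exc_val, exc_tb):
        if self.teardown:
            self.quit()
        return False  # do not suppress exceptions raised inside the with block

    def quit(self):
        self.closed = True


with FakeBrowser(teardown=True) as closing_bot:
    pass
print(closing_bot.closed)  # True

with FakeBrowser(teardown=False) as keeping_bot:
    pass
print(keeping_bot.closed)  # False

# __exit__ still runs when the block fails part-way through
failing_bot = FakeBrowser(teardown=True)
try:
    with failing_bot:
        raise RuntimeError("scraping failed")
except RuntimeError:
    pass
print(failing_bot.closed)  # True
```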

Summary

In this blog, we learned about Selenium, a powerful browser automation framework, and how to use it to scrape data from JavaScript-heavy websites. We then walked through a real-world example in which we scraped hotel names from Booking.com and saved them to a CSV file. Now it is your turn to scrape a website and create a custom dataset for your next data science project.

Sample CSV output file -

https://drive.google.com/file/d/1nHRaqPauf5O90LZfrOwNRzxBoNoPh2hI/view?usp=sharing

GitHub Link - https://github.com/devSubho51347/booking.com-scraper/tree/master
