Photo by Christopher Gower on Unsplash

Web Scraping with Beautiful Soup: A Comprehensive Guide for Data Science Projects

Introduction to Web Scraping for Data Science

Definition of Web Scraping

Web Scraping is the process of extracting information from websites. Typically, an automated bot performs the scraping at a set frequency. Use cases range from tracking a particular product on Amazon to collecting data from a website for use in a project or pipeline. Companies typically rely on such automated tools to reduce manual intervention.

Overview of the role of web scraping in data science

Data Science is used by almost every company, big or small, in today's world. To build any model, we need data, and at times we are not given enough of it to build a good model. Since a model trained on a small dataset is more likely to overfit, we need alternative ways to find additional data points for the problem at hand. In such cases, Web Scraping can help collect data that, possibly combined with data augmentation techniques, may be enough to solve the problem.

There could also be cases where clients don't provide the data themselves, and we need to extract the information from the websites they use to keep track of things. In such cases, we would have to scrape those websites to build a tool for their use.

The task need not even be restricted to data science; many apps need to scrape websites to keep their content up to date and close to real time.

Importance of Web Scraping for Dataset Creation

Web Scraping is very important for dataset creation because:

We need data that is up to date and captures the variation (noise) of recent days.

Capturing that variation requires a wide variety of data, which Web Scraping makes feasible to collect.

The entire process is automated, requiring little to no human intervention.

Web Scraping builds datasets much faster than manual processes, making it possible to gather even larger datasets.

There are many more reasons to adopt Web Scraping, such as customizability and the ability to capture time-related information.

The role of BeautifulSoup for web scraping

Beautiful Soup is a Python library for web scraping and data extraction from HTML and XML documents. It helps to parse and process web pages and extract the information required for data analysis and other purposes.

Beautiful Soup helps us do various tasks like parsing, data extraction, data manipulation, etc. It's also very easy to use and easily integrated into any existing pipeline.

Overall, Beautiful Soup is an essential tool for web scraping and data extraction and plays a key role in creating datasets for data analysis and other purposes.
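To make parsing and extraction concrete, here is a minimal sketch that parses a small, made-up HTML snippet and pulls out text and attributes. The markup, the `clinic` class name, and the links are hypothetical and only stand in for a downloaded page:

```python
from bs4 import BeautifulSoup

# A small, hypothetical HTML snippet standing in for a downloaded page
html = """
<html>
  <head><title>Clinic directory</title></head>
  <body>
    <ul>
      <li class="clinic"><a href="/c/1">Alpha Clinic</a></li>
      <li class="clinic"><a href="/c/2">Beta Clinic</a></li>
    </ul>
  </body>
</html>
"""

# Parse the markup with Python's built-in HTML parser
soup = BeautifulSoup(html, "html.parser")

# Navigate directly to a single element
page_title = soup.title.get_text()

# Extract text and an attribute from every element matching a CSS selector
entries = [
    {"name": a.get_text(strip=True), "link": a["href"]}
    for a in soup.select("li.clinic a")
]

print(page_title)  # Clinic directory
print(entries)
```

The same two moves, selecting elements and then reading their text or attributes, are what the examples below apply to real pages.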

Introduction to BeautifulSoup

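Install the libraries used in this guide (`beautifulsoup4` is the package name on PyPI; it is imported as `bs4`):

```shell
pip install beautifulsoup4 requests pandas
```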

Benefits of using BeautifulSoup for web scraping

The benefits of using BeautifulSoup for web scraping and data extraction include:

Ease of use

Speed

Flexibility

Integrations, etc.

A real-life example of web scraping

To see how things are done with BeautifulSoup, we'll look at a few examples.

Example 1: Scraping all the clinics from Trustpilot

For this example, let's scrape https://www.trustpilot.com/categories/clinics.

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup

url = 'https://www.trustpilot.com/categories/clinics'
```

Now, we have the base URL from which we have to scrape the information. Let's see how we can do it.

This can be broken into two steps:

Get the page source with the `requests` library using a `GET` request.

Convert the response to a `BeautifulSoup` object.

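```python
# Step 1: download the page source with a GET request
response = requests.get(url)
response  # in a notebook, this displays the status, e.g. <Response [200]>

# Step 2: parse the raw bytes into a BeautifulSoup object
soup = BeautifulSoup(response.content, 'html.parser')

# Preview the first 5,000 characters of the indented HTML
print(soup.prettify()[:5000])
```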

The above cell would output something like this:

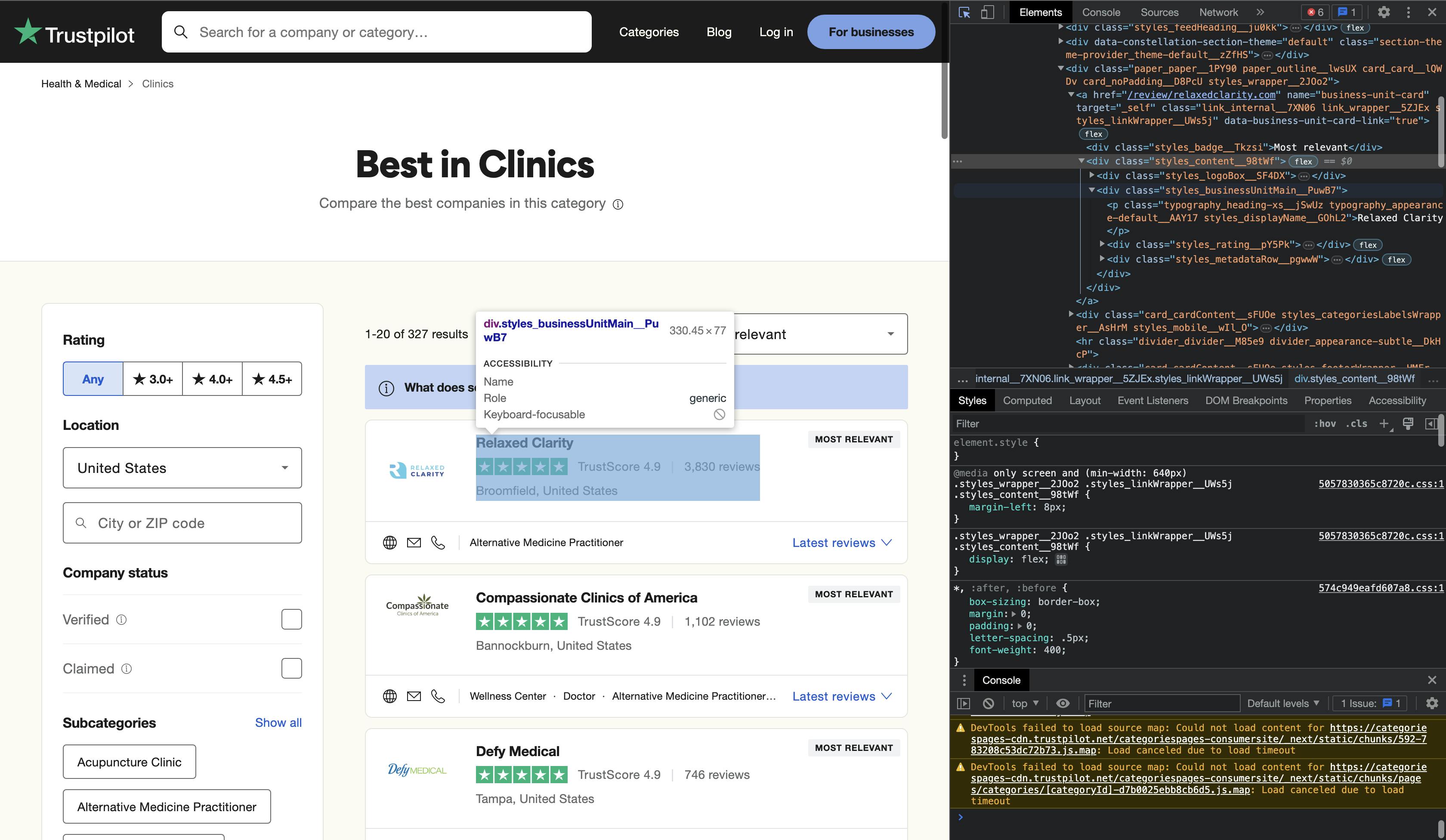

Inspect the page and get the required elements

Next, we need to locate the elements that hold the data we want and inspect them to decide which selectors and functions to use.

To inspect the web page, use the following keyboard shortcuts:

Windows: Control + Shift + C

Mac: Command + Option + I

Once you do this, you should be able to see something like this:

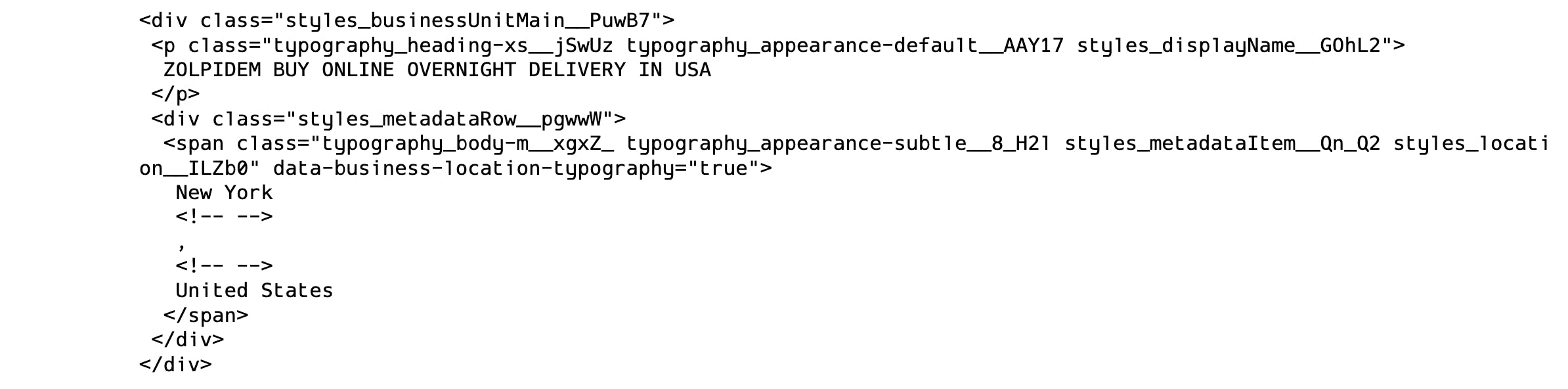

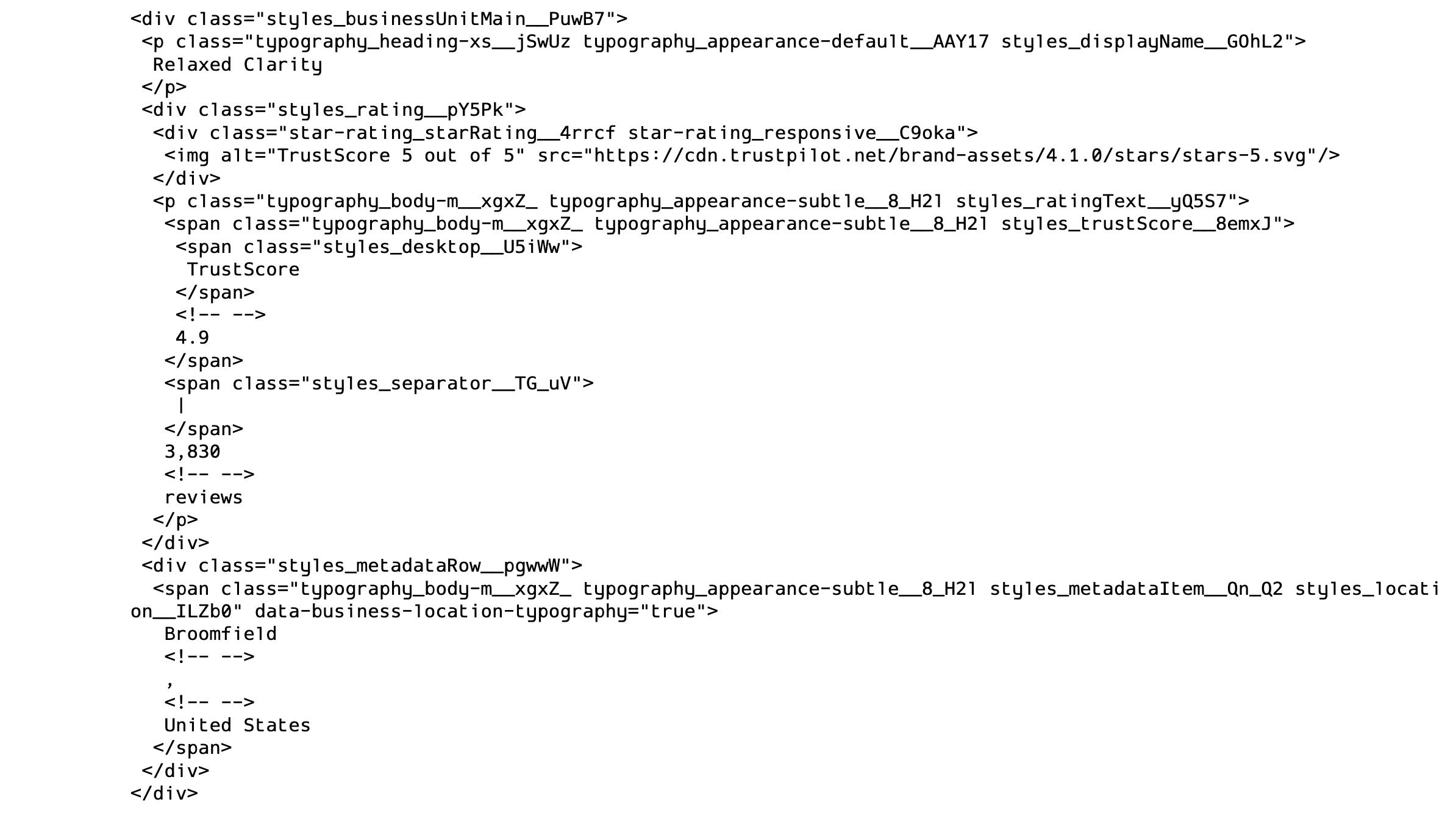

We use the `soup.select()` method for this task. It takes a CSS selector and returns a list of all matching elements.

To learn more about CSS selectors, take a look at W3Schools.
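The clinic selector used in this example joins two class selectors with `~`, the CSS general sibling combinator: `A~B` matches every `B` that comes after an `A` under the same parent. A minimal sketch on hypothetical markup (the `card`, `logo`, `main`, and `extra` class names are invented for illustration) shows how it differs from a plain class selector:

```python
from bs4 import BeautifulSoup

# Hypothetical markup loosely mirroring a listing card: a logo box followed by sibling divs
html = """
<div class="card">
  <div class="logo">logo</div>
  <div class="main">Alpha Clinic</div>
  <div class="extra">not selected</div>
</div>
<div class="main">No logo before me</div>
"""

soup = BeautifulSoup(html, "html.parser")

# 'A ~ B' matches every B that follows an A with the same parent
after_logo = soup.select("div.logo ~ div.main")

# A plain class selector matches every div.main, including the one with no logo sibling
all_main = soup.select("div.main")

print([d.get_text() for d in after_logo])  # ['Alpha Clinic']
print(len(all_main))                        # 2
```

Anchoring on a sibling like this is one way to narrow a match to the elements inside listing cards rather than every element that happens to share a class.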

```python
# 'A~B' selects each B element that follows an A element under the same parent
clinics = soup.select('div.styles_logoBox__SF4DX~div.styles_businessUnitMain__PuwB7')

len(clinics)  # should return 20 in this case
```

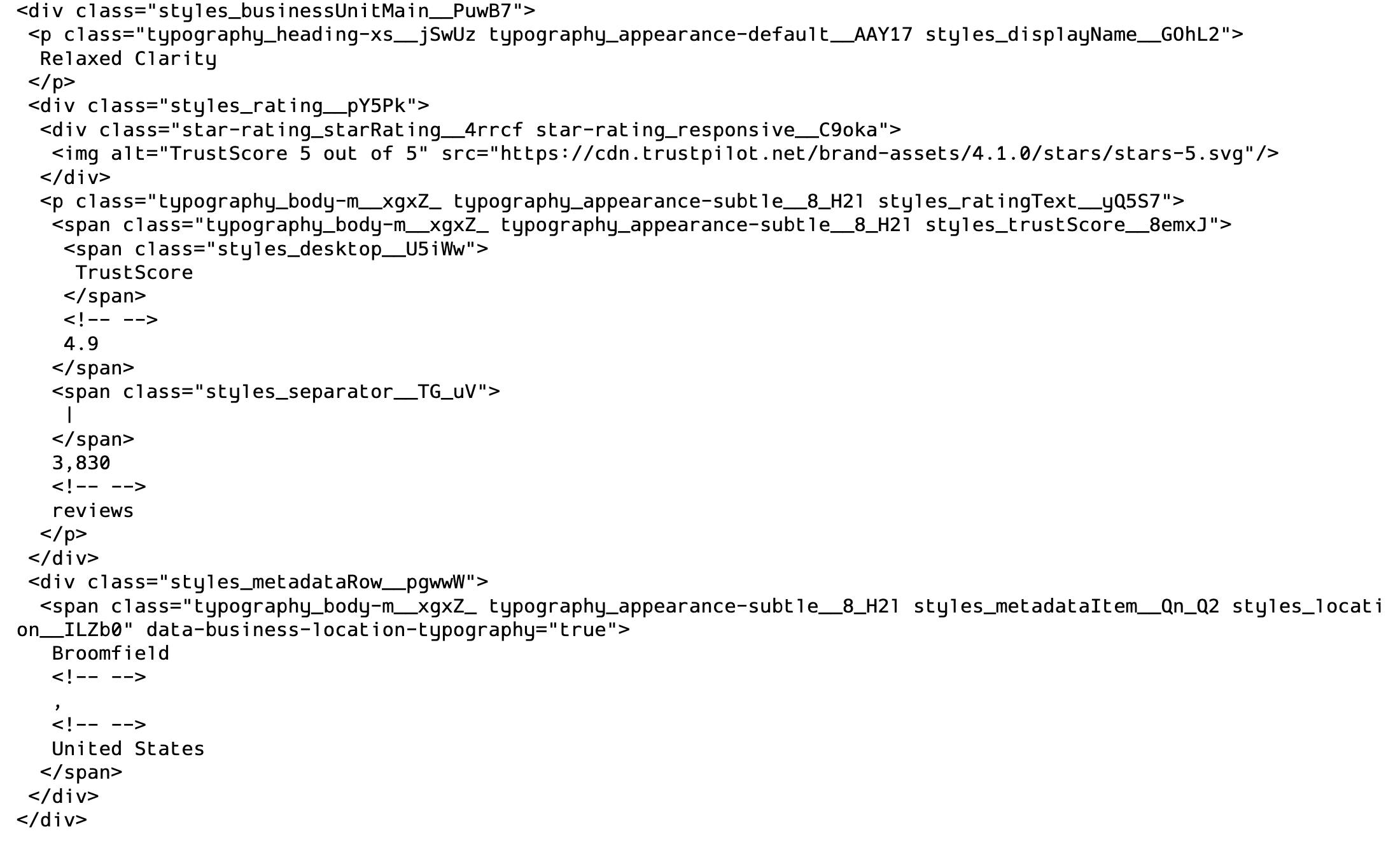

```python
print(clinics[0].prettify())
```

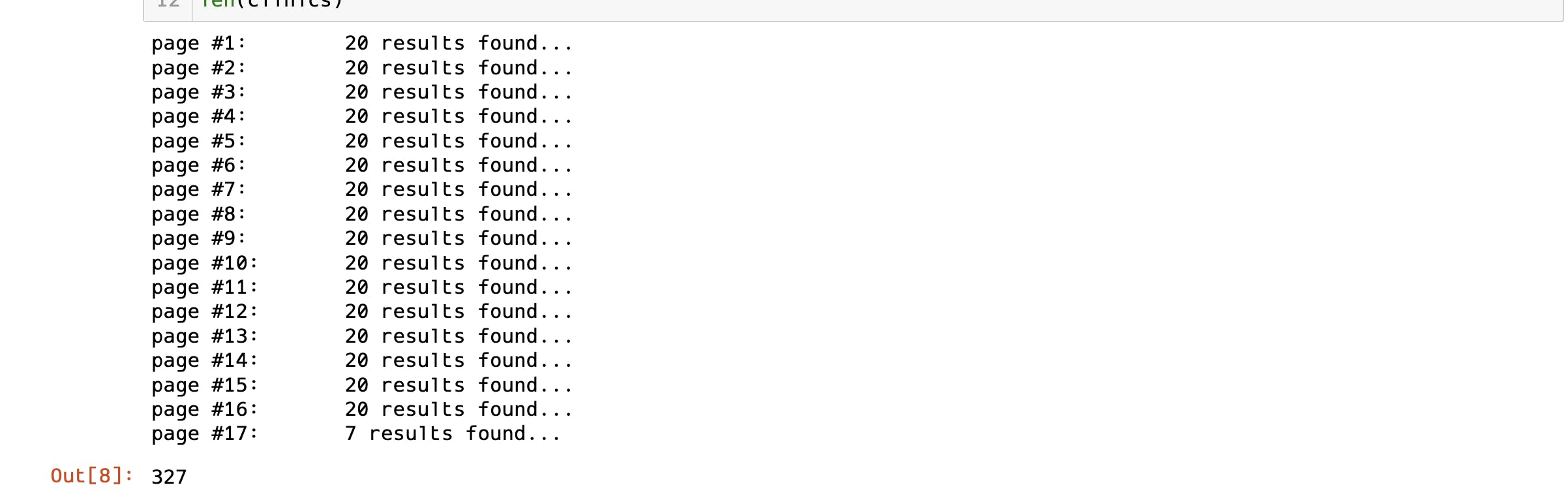

Also, note that the results are paginated. The pattern is easy to spot in the page URL: each page is selected with a `page` query parameter. The last page is page 17, which has `?page=17` appended to the URL. The same pattern applies to every page in this listing.

```python
total_num_pages = 17

clinics = []

for page_num in range(1, total_num_pages + 1):
    # Build the URL for each page, e.g. ...clinics?page=2
    tmp_url = url + '?page=' + str(page_num)
    response = requests.get(tmp_url)
    soup = BeautifulSoup(response.content, 'html.parser')
    tmp_clinics = soup.select('div.styles_logoBox__SF4DX~div.styles_businessUnitMain__PuwB7')
    print(f'page #{page_num}:\t{len(tmp_clinics)} results found...')
    clinics.extend(tmp_clinics)

len(clinics)
```

```python
print(clinics[-1].prettify())
```

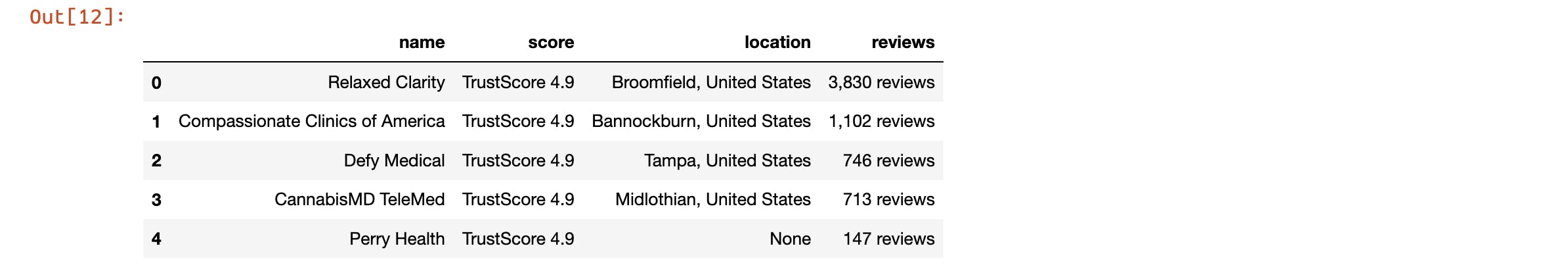
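The loop above can also be wrapped in a reusable function. The sketch below is one possible version, not the original code: `scrape_pages` is a hypothetical helper name, and it assumes the site paginates with a `page` query parameter as described above. It reuses a `requests.Session`, lets `requests` build the query string through `params`, raises on HTTP errors, and pauses between requests (the one-second default is an arbitrary choice).

```python
import time

import requests
from bs4 import BeautifulSoup


def scrape_pages(base_url, selector, num_pages, session=None, delay=1.0):
    """Collect elements matching `selector` from pages 1..num_pages of a paginated listing.

    Hypothetical helper; assumes pagination via a `page` query parameter.
    """
    if session is None:
        session = requests.Session()  # reuse one connection pool across pages
    results = []
    for page_num in range(1, num_pages + 1):
        # `params` makes requests append the `?page=N` query string for us
        response = session.get(base_url, params={"page": page_num}, timeout=10)
        response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
        soup = BeautifulSoup(response.content, "html.parser")
        page_results = soup.select(selector)
        print(f"page #{page_num}:\t{len(page_results)} results found...")
        results.extend(page_results)
        time.sleep(delay)  # pause between requests to go easy on the server
    return results
```

For example, `clinics = scrape_pages(url, 'div.styles_logoBox__SF4DX~div.styles_businessUnitMain__PuwB7', 17)` should behave like the loop above, assuming the page structure has not changed. Accepting a `session` argument also makes the function easy to test with a stand-in object.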

We have now collected the HTML for every clinic. Next, let's extract the individual fields and convert them into a DataFrame to make them easier to explore.

```python
print(clinics[0].prettify())

# select() returns a list, so take the first match for each field
name = clinics[0].select('p.styles_displayName__GOhL2')[0].text
score = clinics[0].select('span.styles_trustScore__8emxJ')[0].text
location = clinics[0].select('span.styles_location__ILZb0')[0].text
# The rating text holds several parts separated by '|'; keep only the last segment
reviews = clinics[0].select('p.styles_ratingText__yQ5S7')[0].text.split('|')[-1]

name, score, location, reviews
```

```python
data = {
    'name': [],
    'score': [],
    'location': [],
    'reviews': []
}

for clinic in clinics:
    data['name'].append(clinic.select('p.styles_displayName__GOhL2')[0].text)
    # A card may lack a score, location, or rating; select() then returns an
    # empty list and [0] raises IndexError, so we record None instead
    try:
        data['score'].append(clinic.select('span.styles_trustScore__8emxJ')[0].text)
    except IndexError:
        data['score'].append(None)
    try:
        data['location'].append(clinic.select('span.styles_location__ILZb0')[0].text)
    except IndexError:
        data['location'].append(None)
    try:
        data['reviews'].append(clinic.select('p.styles_ratingText__yQ5S7')[0].text.split('|')[-1])
    except IndexError:
        data['reviews'].append(None)

df = pd.DataFrame(data)
df.head()
```

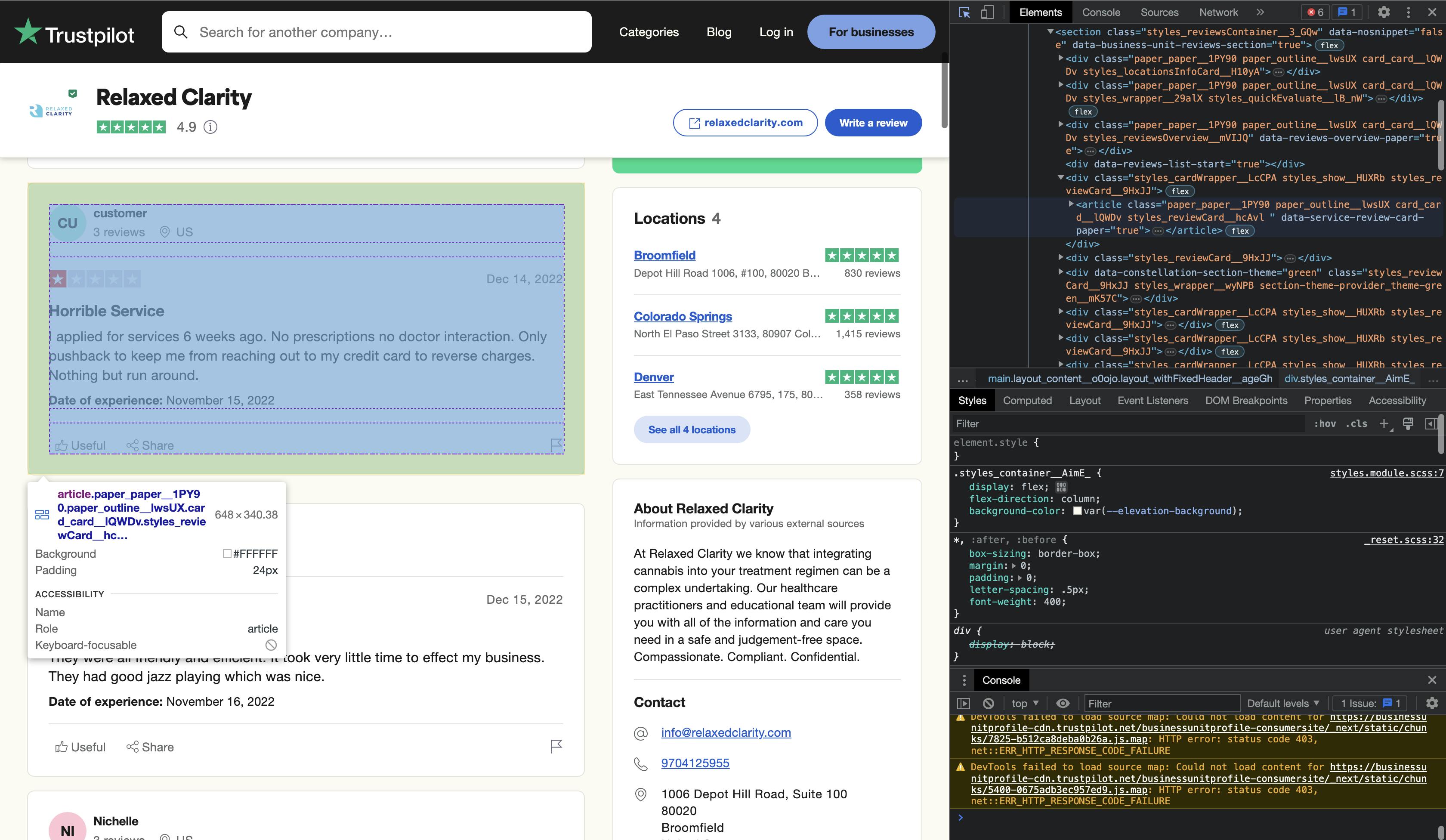
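The repeated try/except blocks can be condensed with `select_one()`, which returns the first match or `None` instead of a list. Here is a sketch with a hypothetical `select_text` helper run on made-up markup. Note that it uses `get_text(strip=True)`, which also trims surrounding whitespace, so its output can differ slightly from `.text`:

```python
from bs4 import BeautifulSoup


def select_text(tag, selector):
    """Hypothetical helper: stripped text of the first match for `selector`, or None."""
    match = tag.select_one(selector)
    return match.get_text(strip=True) if match is not None else None


# Hypothetical card that has a name but no score element
card = BeautifulSoup('<div><p class="name"> Alpha Clinic </p></div>', "html.parser")

print(select_text(card, "p.name"))      # Alpha Clinic
print(select_text(card, "span.score"))  # None
```

Inside the loop, `data['score'].append(select_text(clinic, 'span.styles_trustScore__8emxJ'))` would then replace a full try/except block. The `reviews` field would still need its own handling because of the extra `split('|')`.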

Example 2: Scraping all the reviews from a clinic in Trustpilot

```python
url = 'https://www.trustpilot.com/review/relaxedclarity.com'
```

This page is structured similarly to the previous one. Here we'll collect the following details:

Review Title

Review Body

Review Rating

Review Date

Each review is wrapped in an `<article>` tag, so we select those.

```python
response = requests.get(url)
soup = BeautifulSoup(response.content, 'html.parser')

# Each review card is its own <article> element
reviews = soup.select('article')

len(reviews)  # should return 20

print(reviews[0].prettify())
```

The above cell should output something like this:

```html
<article class="paper_paper__1PY90 paper_outline__lwsUX card_card__lQWDv styles_reviewCard__hcAvl" data-service-review-card-paper="true">
 <aside aria-label="Info for customer" class="styles_consumerInfoWrapper__KP3Ra">
  <div class="styles_consumerDetailsWrapper__p2wdr">
   <div class="avatar_avatar__hmBp6 avatar_green__y0Z46" data-consumer-avatar="true" style="width:44px;min-width:44px;height:44px;min-height:44px">
    <span class="typography_heading-xs__jSwUz typography_appearance-default__AAY17 typography_disableResponsiveSizing__OuNP7 avatar_avatarName__ehkAr">
     CU
    </span>
   </div>
   <a class="link_internal__7XN06 link_wrapper__5ZJEx styles_consumerDetails__ZFieb" data-consumer-profile-link="true" href="/users/6390e210e043c20013a5b257" name="consumer-profile" rel="nofollow" target="_self">
    <span class="typography_heading-xxs__QKBS8 typography_appearance-default__AAY17" data-consumer-name-typography="true">
     customer
    </span>
    <div class="styles_consumerExtraDetails__fxS4S" data-consumer-reviews-count="3">
     <span class="typography_body-m__xgxZ_ typography_appearance-subtle__8_H2l" data-consumer-reviews-count-typography="true">
      3
      <!-- -->
      reviews
     </span>
     <div class="typography_body-m__xgxZ_ typography_appearance-subtle__8_H2l styles_detailsIcon__Fo_ua" data-consumer-country-typography="true">
      <svg class="icon_icon__ECGRl" fill="currentColor" height="14px" viewbox="0 0 16 16" width="14px" xmlns="http://www.w3.org/2000/svg">
       <path clip-rule="evenodd" d="M3.404 1.904A6.5 6.5 0 0 1 14.5 6.5v.01c0 .194 0 .396-.029.627l-.004.03-.023.095c-.267 2.493-1.844 4.601-3.293 6.056a18.723 18.723 0 0 1-2.634 2.19 11.015 11.015 0 0 1-.234.154l-.013.01-.004.002h-.002L8 15.25l-.261.426h-.002l-.004-.003-.014-.009a13.842 13.842 0 0 1-.233-.152 18.388 18.388 0 0 1-2.64-2.178c-1.46-1.46-3.050-3.587-3.318-6.132l-.003-.026v-.068c-.025-.2-.025-.414-.025-.591V6.5a6.5 6.5 0 0 1 1.904-4.596ZM8 15.25l-.261.427.263.16.262-.162L8 15.25Zm-.002-.598a17.736 17.736 0 0 0 2.444-2.04c1.4-1.405 2.79-3.322 3.01-5.488l.004-.035.026-.105c.018-.153.018-.293.018-.484a5.5 5.5 0 0 0-11 0c0 .21.001.371.02.504l.005.035v.084c.24 2.195 1.632 4.109 3.029 5.505a17.389 17.389 0 0 0 2.444 2.024Z" fill-rule="evenodd">
       </path>
       <path clip-rule="evenodd" d="M8 4a2.5 2.5 0 1 0 0 5 2.5 2.5 0 0 0 0-5ZM4.5 6.5a3.5 3.5 0 1 1 7 0 3.5 3.5 0 0 1-7 0Z" fill-rule="evenodd">
       </path>
      </svg>
      <span>
       US
      </span>
     </div>
    </div>
   </a>
  </div>
 </aside>
 <hr class="divider_divider__M85e9 styles_cardDivider__42s_0 divider_appearance-subtle__DkHcP"/>
 <section aria-disabled="false" class="styles_reviewContentwrapper__zH_9M">
  <div class="styles_reviewHeader__iU9Px" data-service-review-rating="1">
   <div class="star-rating_starRating__4rrcf star-rating_medium__iN6Ty">
    <img alt="Rated 1 out of 5 stars" src="https://cdn.trustpilot.net/brand-assets/4.1.0/stars/stars-1.svg"/>
   </div>
   <div class="typography_body-m__xgxZ_ typography_appearance-subtle__8_H2l styles_datesWrapper__RCEKH">
    <time class="" data-service-review-date-time-ago="true" datetime="2022-12-13T21:25:21.000Z">
     Dec 13, 2022
    </time>
   </div>
  </div>
  <div aria-hidden="false" class="styles_reviewContent__0Q2Tg" data-review-content="true">
   <a class="link_internal__7XN06 typography_appearance-default__AAY17 typography_color-inherit__TlgPO link_link__IZzHN link_notUnderlined__szqki" data-review-title-typography="true" href="/reviews/6398d1a1d075435bd8dae197" rel="nofollow" target="_self">
    <h2 class="typography_heading-s__f7029 typography_appearance-default__AAY17" data-service-review-title-typography="true">
     Horrible Service
    </h2>
   </a>
   <p class="typography_body-l__KUYFJ typography_appearance-default__AAY17 typography_color-black__5LYEn" data-service-review-text-typography="true">
    I applied for services 6 weeks ago. No prescriptions no doctor interaction. Only pushback to keep me from reaching out to my credit card to reverse charges. Nothing but run around.
   </p>
   <p class="typography_body-m__xgxZ_ typography_appearance-default__AAY17 typography_color-black__5LYEn" data-service-review-date-of-experience-typography="true">
    <b class="typography_body-m__xgxZ_ typography_appearance-default__AAY17 typography_weight-heavy__E1LTj" weight="heavy">
     Date of experience
     <!-- -->
     :
    </b>
    <!-- -->
    November 15, 2022
   </p>
  </div>
 </section>
</article>
```

title = reviews[0].select_one('h2.typography_heading-s__f7029').text

time = reviews[0].select_one('time').text

body = reviews[0].select_one('p.typography_body-l__KUYFJ').text

rating = reviews[0].select_one('img')['alt'] # access the attribute as dictionary

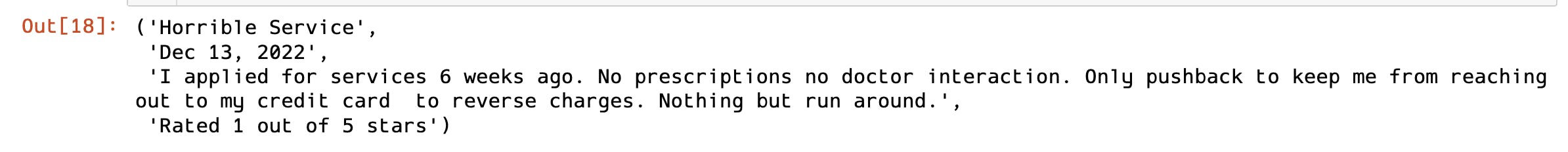

title, time, body, rating
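The `rating` value above is the full alt text, `'Rated 1 out of 5 stars'`. If you need a number, one option is to parse it with a regular expression; another is to read the `data-service-review-rating` attribute visible in the HTML output above. The sketch below tries both on a simplified copy of that markup (the shortened `src` and the `parse_rating` helper are illustrative assumptions) and also reads the `<time>` tag's machine-readable `datetime` attribute:

```python
import re

from bs4 import BeautifulSoup

# Simplified copy of the review header from the output above
html = """
<div class="styles_reviewHeader__iU9Px" data-service-review-rating="1">
  <img alt="Rated 1 out of 5 stars" src="stars-1.svg"/>
  <time datetime="2022-12-13T21:25:21.000Z">Dec 13, 2022</time>
</div>
"""
review = BeautifulSoup(html, "html.parser")


def parse_rating(alt_text):
    """Hypothetical helper: pull the star count out of text like 'Rated 1 out of 5 stars'."""
    match = re.search(r"Rated (\d+) out of", alt_text or "")
    return int(match.group(1)) if match else None


# Option 1: parse the image's alt text (attributes are read like dictionary keys)
rating_from_alt = parse_rating(review.select_one("img")["alt"])

# Option 2: read the numeric data attribute on the header div directly
header = review.select_one("[data-service-review-rating]")
rating_from_attr = int(header["data-service-review-rating"])

# The `datetime` attribute is ISO 8601, which is easier to parse than the display text
timestamp = review.select_one("time")["datetime"]

print(rating_from_alt, rating_from_attr, timestamp)  # 1 1 2022-12-13T21:25:21.000Z
```

The data attribute avoids depending on the exact wording of the alt text, but both are tied to Trustpilot's current markup and may change.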

Again, this page is paginated, so we'll use the same approach as before. This time, there are 173 pages.

```python
reviews = []
num_pages = 173

for page_num in range(1, num_pages + 1):
    tmp_url = url + '?page=' + str(page_num)
    # Request the page-specific URL; requesting `url` here would fetch page 1 every time
    response = requests.get(tmp_url)
    soup = BeautifulSoup(response.content, 'html.parser')
    tmp_reviews = soup.select('article')
    print(f'page #{page_num}:\t{len(tmp_reviews)} results found...')
    reviews.extend(tmp_reviews)
```

```python
data = {
    'title': [],
    'body': [],
    'time': [],
    'rating': []
}

for review in reviews:
    # select_one() returns None when nothing matches, so .text raises AttributeError;
    # indexing None with ['alt'] raises TypeError, and a missing attribute raises KeyError
    try:
        data['title'].append(review.select_one('h2.typography_heading-s__f7029').text)
    except AttributeError:
        data['title'].append(None)
    try:
        data['time'].append(review.select_one('time').text)
    except AttributeError:
        data['time'].append(None)
    try:
        data['body'].append(review.select_one('p.typography_body-l__KUYFJ').text)
    except AttributeError:
        data['body'].append(None)
    try:
        data['rating'].append(review.select_one('img')['alt'])
    except (TypeError, KeyError):
        data['rating'].append(None)
```

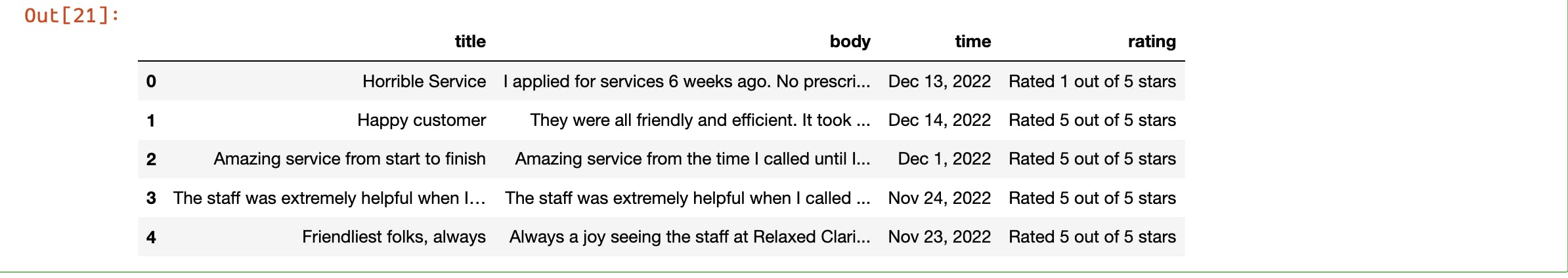

df = pd.DataFrame(data)

df.head()
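At this point the `time` and `rating` columns are still plain strings. A minimal post-processing sketch with pandas, run here on two hypothetical rows shaped like the scraped `data` dictionary (the `sample_df` name is illustrative, and it assumes dates look like `Dec 13, 2022`), parses the dates, turns the alt text into a nullable integer star count, and drops rows where every field is missing:

```python
import pandas as pd

# Two hypothetical rows shaped like the scraped `data` dictionary (the second is entirely missing)
sample_df = pd.DataFrame({
    "title": ["Horrible Service", None],
    "body": ["I applied for services 6 weeks ago.", None],
    "time": ["Dec 13, 2022", None],
    "rating": ["Rated 1 out of 5 stars", None],
})

# Parse display dates such as 'Dec 13, 2022'; unparseable or missing values become NaT
sample_df["time"] = pd.to_datetime(sample_df["time"], format="%b %d, %Y", errors="coerce")

# Pull the star count out of the alt text and store it as a nullable integer (missing -> <NA>)
stars = sample_df["rating"].str.extract(r"Rated (\d+) out of", expand=False)
sample_df["rating"] = pd.to_numeric(stars).astype("Int64")

# Drop rows in which every scraped field is missing
sample_df = sample_df.dropna(how="all")

print(sample_df)
```

The same three steps could be applied to the real `df`; the nullable `Int64` dtype keeps missing ratings as `<NA>` instead of forcing the column to floats.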

We hope you found this blog post on web scraping with Beautiful Soup helpful and informative.

If you're interested in learning more about data science, we invite you to check out our YouTube channel. We offer practical NLP tutorials covering various topics, including GPT-3 and Hugging Face Transformers.

Also, don't forget to check out our project AI Demos! AIDemos.com is the ultimate directory for video demos of the latest AI tools, technologies, and SaaS solutions.