Introduction

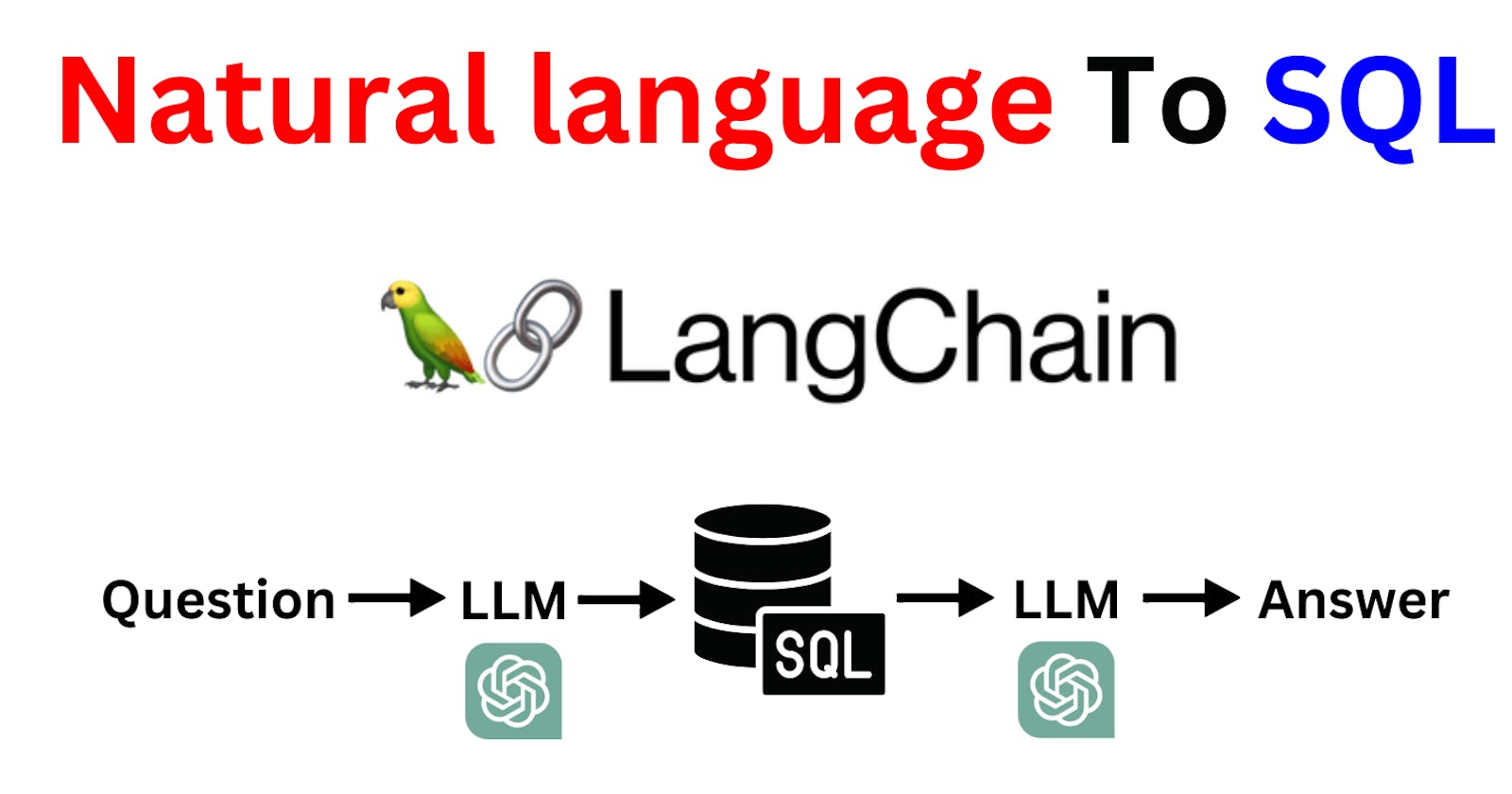

Welcome to our deep dive into revolutionizing the way we interact with databases using Natural Language Processing (NLP) and LangChain. In today's data-driven world, the ability to query databases without needing to know complex SQL syntax opens up a myriad of possibilities across various industries, from healthcare to finance, making data more accessible to everyone.

This blog post aims to guide you through a comprehensive journey to master NL2SQL using LangChain. We will explore the steps necessary to build an intuitive, efficient, and intelligent NL2SQL model that can understand and process natural language queries, dynamically select relevant database tables, and maintain a conversational context to handle follow-up questions effectively.

By the end of this post, you'll have a solid understanding of:

Building a Basic NL2SQL Model: The foundation of translating natural language queries into SQL commands.

Incorporating Few-Shot Learning: Enhancing model accuracy with examples.

Dynamic Few-Shot Example Selection: Tailoring examples to the query context for improved relevance.

Dynamic Relevant Table Selection: Automatically identifying which tables to query based on the natural language input.

Customizing Prompts and Responses: Fine-tuning the model's interaction to provide clear, concise, and relevant answers.

Adding Memory to Chatbots: Enabling the model to handle follow-up questions by remembering the context of the conversation.

Through each of these steps, we'll discuss the concepts, show you how to implement them, and illustrate the outcomes, ensuring you have the tools and knowledge needed to bring the power of NL2SQL to your databases.

Let's embark on this exciting journey to unlock the full potential of your data, making database queries as simple as conversing with a friend.

Building a Basic NL2SQL Model

The first step in our journey to revolutionize database querying with natural language is constructing a basic NL2SQL model using LangChain. This foundational model serves as the cornerstone for more advanced functionalities we'll explore later. Here's how we begin:

Understanding the Basics

At its core, an NL2SQL model aims to translate natural language queries into SQL commands. But how do we start building such a model with LangChain?

Setting Up LangChain

LangChain simplifies the process of creating NL2SQL models by providing a flexible framework that integrates seamlessly with existing databases and natural language processing (NLP) models. To get started, you'll need to:

Install LangChain: Ensure that LangChain is installed in your environment.

    pip install langchain_openai langchain_community langchain pymysql chromadb -q

Connect to Your Database: The next step involves establishing a connection to your database. LangChain supports various database systems, so you'll likely find your database among the supported ones. You'll use the database credentials to create a connection that LangChain can use to interact with your data.

    import os

    from langchain_community.utilities.sql_database import SQLDatabase

    os.environ["OPENAI_API_KEY"] = ""  # set your OpenAI API key here

    # Fill in your MySQL credentials
    db_user = ""
    db_password = ""
    db_host = ""
    db_name = "classicmodels"

    # Alternative: limit sampled rows, restrict the tables, or supply custom table info
    # db = SQLDatabase.from_uri(f"mysql+pymysql://{db_user}:{db_password}@{db_host}/{db_name}",sample_rows_in_table_info=1,include_tables=['customers','orders'],custom_table_info={'customers':"customer"})
    db = SQLDatabase.from_uri(f"mysql+pymysql://{db_user}:{db_password}@{db_host}/{db_name}")

    print(db.dialect)
    print(db.get_usable_table_names())
    print(db.table_info)

The First Query

Once the setup is complete, you can start by formulating a simple query in natural language, such as "Show me all products priced above $100." LangChain takes this input and, through its integration with language models like ChatGPT and your database, generates an SQL query that captures the intent of your request.

    from langchain.chains import create_sql_query_chain
    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

    # Chain that turns a natural language question into a SQL query string
    generate_query = create_sql_query_chain(llm, db)

    query = generate_query.invoke({"question": "what is price of `1968 Ford Mustang`"})
    print(query)
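
To make the step above less of a black box, here is a minimal sketch of what a query-generation prompt typically contains: the database schema plus the user's question. It uses the standard library's sqlite3 module instead of MySQL, and the function name `build_nl2sql_prompt`, the prompt wording, and the two-column `products` table are assumptions made for illustration. It is not LangChain's actual prompt or implementation.

```python
import sqlite3


def build_nl2sql_prompt(conn, question, top_k=5):
    """Assemble a text prompt from the live schema and the user's question."""
    # sqlite_master stores the CREATE statement of every table
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    table_info = "\n\n".join(row[0] for row in rows)
    return (
        "You are a SQLite expert. Write one syntactically correct query.\n"
        f"Return at most {top_k} rows unless told otherwise.\n\n"
        f"Schema:\n{table_info}\n\n"
        f"Question: {question}\nSQLQuery:"
    )


# Hypothetical stand-in for the real database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (productName TEXT, buyPrice REAL)")

prompt = build_nl2sql_prompt(conn, "What is the price of the 1968 Ford Mustang?")
print(prompt)
```

The point of the sketch is that the model never sees the data itself at this stage, only the table definitions and the question, which is why the schema text matters so much for query quality.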

Seeing the Results

Executing the generated SQL query against your database retrieves the data you're looking for, which LangChain can then present in a user-friendly format.

    from langchain_community.tools.sql_database.tool import QuerySQLDataBaseTool

    # Tool that runs a SQL string against the connected database
    execute_query = QuerySQLDataBaseTool(db=db)
    execute_query.invoke(query)
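
Because the SQL being executed is written by a language model, it can be worth checking it before it reaches the database. The following is a minimal sketch of one such check, assuming you only ever want to run a single SELECT statement. The helper `run_read_only` and the sample row are hypothetical, and sqlite3 stands in for MySQL so the example is self-contained.

```python
import sqlite3


def run_read_only(conn, sql):
    """Execute a single SELECT statement and return its rows; reject anything else."""
    statement = sql.strip().rstrip(";").strip()
    # Conservative checks: this also rejects semicolons inside string literals
    # and queries that start with WITH, which a production guard should handle.
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(statement).fetchall()


# Hypothetical table and price, for illustration only
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (productName TEXT, buyPrice REAL)")
conn.execute("INSERT INTO products VALUES ('1968 Ford Mustang', 100.0)")

rows = run_read_only(
    conn, "SELECT buyPrice FROM products WHERE productName = '1968 Ford Mustang';"
)
print(rows)
```

A string check like this is only a first line of defense. Connecting with a database user that has read-only privileges is the more robust option.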

Moving Forward

With the basic NL2SQL model set up, you've taken the first step towards transforming how we interact with databases. However, this is just the beginning. As we progress, we'll explore how to enhance the model's accuracy, handle more complex queries, and even maintain context over a conversation for follow-up questions.

Rephrasing Answers for Enhanced Clarity

After your NL2SQL model successfully executes a SQL query, the next pivotal step is to present the data in a manner that's easily understandable by your users. This is where the art of rephrasing SQL results into clear, natural language answers comes into play. Here's how you can achieve this with LangChain:

Implementing Rephrasing with LangChain

Use Prompt Templates: LangChain allows you to create prompt templates that can guide the model in how to rephrase SQL results. These templates can include placeholders for the original question, the SQL query, and the query result, setting the stage for generating a natural language response.

    from operator import itemgetter

    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import PromptTemplate
    from langchain_core.runnables import RunnablePassthrough

    answer_prompt = PromptTemplate.from_template(
        """Given the following user question, corresponding SQL query, and SQL result, answer the user question.

    Question: {question}
    SQL Query: {query}
    SQL Result: {result}
    Answer: """
    )

    # Prompt -> LLM -> plain string
    rephrase_answer = answer_prompt | llm | StrOutputParser()

    # Generate the query, execute it, then rephrase the result
    chain = (
        RunnablePassthrough.assign(query=generate_query).assign(
            result=itemgetter("query") | execute_query
        )
        | rephrase_answer
    )

    chain.invoke({"question": "How many customers have an order count greater than 5"})

Example: Transforming SQL Results into User-Friendly Responses

Suppose a user asks, "How many customers have an order count greater than 5?" and the SQL query returns a raw numerical result. The rephrasing step converts this into a more readable answer, such as "There are 2 customers with an order count of more than 5." This closes the loop between user queries and database responses, ensuring that the information provided is both useful and easy to digest. The chain returns:

There are 2 customers with an order count of more than 5.
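
To see what the model actually receives in the rephrasing step, the sketch below fills the same template with plain string formatting. The SQL text is an illustrative guess at what a generated query might look like, not output captured from the chain, and `build_answer_prompt` is a hypothetical helper.

```python
ANSWER_TEMPLATE = (
    "Given the following user question, corresponding SQL query, "
    "and SQL result, answer the user question.\n\n"
    "Question: {question}\n"
    "SQL Query: {query}\n"
    "SQL Result: {result}\n"
    "Answer: "
)


def build_answer_prompt(question, query, result):
    """Fill the three placeholders; the LLM completes the text after 'Answer: '."""
    return ANSWER_TEMPLATE.format(question=question, query=query, result=result)


prompt = build_answer_prompt(
    question="How many customers have an order count greater than 5?",
    # Illustrative query only; the real chain output may differ
    query=(
        "SELECT COUNT(*) FROM (SELECT customerNumber FROM orders "
        "GROUP BY customerNumber HAVING COUNT(*) > 5) AS t"
    ),
    result="[(2,)]",
)
print(prompt)
```

The raw result `[(2,)]` is all the database returns. The question and query give the model enough context to turn it into a full sentence.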

In the next section, we'll dive into the exciting world of few-shot learning and how it can be used to improve the performance of your NL2SQL model with LangChain. Stay tuned to unlock the full potential of natural language database querying.

Enhancing NL2SQL Models with Few-Shot Examples

Few-shot learning involves providing the model with a small set of carefully selected examples that demonstrate how to convert natural language questions into SQL queries. It can significantly improve the model's ability to understand and generate precise SQL commands based on user queries, bridging the gap between human language and database querying.

Incorporating Few-Shot Examples into LangChain

Selecting Relevant Examples: The first step is to curate a set of examples that cover a broad range of query types and complexities. These examples should ideally reflect the most common or critical queries your users might perform.

    examples = [
        {
            "input": "List all customers in France with a credit limit over 20,000.",
            "query": "SELECT * FROM customers WHERE country = 'France' AND creditLimit > 20000;"
        },
        {
            "input": "Get the highest payment amount made by any customer.",
            "query": "SELECT MAX(amount) FROM payments;"
        },
        .....
    ]

Creating a Few-Shot Learning Template: With LangChain, you can design a prompt template that incorporates these examples into the model's workflow. The template instructs the model to consider the examples when generating SQL queries from new user questions.

    from langchain_core.prompts import (
        ChatPromptTemplate,
        FewShotChatMessagePromptTemplate,
        MessagesPlaceholder,
        PromptTemplate,
    )

    # How a single example is rendered: a human question followed by the AI's SQL
    example_prompt = ChatPromptTemplate.from_messages(
        [
            ("human", "{input}\nSQLQuery:"),
            ("ai", "{query}"),
        ]
    )

    few_shot_prompt = FewShotChatMessagePromptTemplate(
        example_prompt=example_prompt,
        examples=examples,
        # input_variables=["input","top_k"],
        input_variables=["input"],
    )

    print(few_shot_prompt.format(input="How many products are there?"))

The formatted prompt looks like this:

    Human: List all customers in France with a credit limit over 20,000.
    SQLQuery:
    AI: SELECT * FROM customers WHERE country = 'France' AND creditLimit > 20000;
    Human: Get the highest payment amount made by any customer.
    SQLQuery:
    AI: SELECT MAX(amount) FROM payments;
    ......

The Impact of Few-Shot Learning

By integrating few-shot examples, your NL2SQL model becomes more adept at handling a wider variety of user queries. This not only improves the user experience by providing more accurate and relevant responses but also reduces the potential for errors in SQL query generation.

In the next section, we'll explore the integration of dynamic example selection to further enhance the model's accuracy and relevance, ensuring that your NL2SQL system remains adaptive and responsive to user queries.

Dynamic Few-Shot Example Selection

This advanced technique tailors the few-shot examples provided to the model based on the specific context of the user's query. It ensures that the guidance offered to the model is not just relevant but optimally aligned with the query's nuances, significantly boosting the model's ability to generate accurate SQL queries.

The Need for Dynamism

Static few-shot examples, though highly effective, have their limitations. Dynamic selection addresses this by intelligently choosing examples that closely match the intent and context of each new query, providing a customized learning experience for the model with every interaction.
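
The idea of picking the closest examples can be sketched without any external service. The code below is a simplified stand-in: it replaces real embeddings with bag-of-words count vectors and ranks examples by cosine similarity. The functions `embed`, `cosine`, and `select_examples` are hypothetical names, and a production setup would use a proper embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_examples(question, examples, k=1):
    """Return the k examples whose 'input' is most similar to the question."""
    q = embed(question)
    ranked = sorted(
        examples, key=lambda ex: cosine(q, embed(ex["input"])), reverse=True
    )
    return ranked[:k]


examples = [
    {
        "input": "List all customers in France with a credit limit over 20,000.",
        "query": "SELECT * FROM customers WHERE country = 'France' AND creditLimit > 20000;",
    },
    {
        "input": "Get the highest payment amount made by any customer.",
        "query": "SELECT MAX(amount) FROM payments;",
    },
]

selected = select_examples("What is the highest payment amount?", examples, k=1)
print(selected[0]["query"])
```

Word overlap misses paraphrases ("biggest" versus "highest"), which is the gap that learned embeddings are meant to close.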

Implementing Dynamic Few-Shot Selection

Example Selector Configuration: Begin by setting up an example selector that can analyze the semantics of the user's query and compare it with a repository of potential examples. Tools like semantic similarity algorithms and vector embeddings come into play here, identifying which examples are most relevant to the current query.

    from langchain_community.vectorstores import Chroma
    from langchain_core.example_selectors import SemanticSimilarityExampleSelector
    from langchain_openai import OpenAIEmbeddings

    vectorstore = Chroma()
    vectorstore.delete_collection()  # clear any previously stored examples

    # Embed the examples and select the k most similar ones for each question
    example_selector = SemanticSimilarityExampleSelector.from_examples(
        examples,
        OpenAIEmbeddings(),
        vectorstore,
        k=2,
        input_keys=["input"],
    )

    example_selector.select_examples({"input": "how many employees we have?"})

    few_shot_prompt = FewShotChatMessagePromptTemplate(
        example_prompt=example_prompt,
        example_selector=example_selector,
        input_variables=["input","top_k"],
    )

    print(few_shot_prompt.format(input="How many products are there?"))

Integrating with LangChain: Integrate the example selector with your LangChain workflow. When a new query is received, the selector determines the most relevant few-shot examples before the model generates the SQL query. This ensures that the guidance provided to the model is tailored to the specific requirements of the query.

    final_prompt = ChatPromptTemplate.from_messages(
        [
            ("system", "You are a MySQL expert. Given an input question, create a syntactically correct MySQL query to run. Unless otherwise specified.\n\nHere is the relevant table info: {table_info}\n\nBelow are a number of examples of questions and their corresponding SQL queries."),
            few_shot_prompt,
            ("human", "{input}"),
        ]
    )

    print(final_prompt.format(input="How many products are there?",table_info="some table info"))

    # Rebuild the query chain with the custom prompt
    generate_query = create_sql_query_chain(llm, db,final_prompt)

    chain = (
        RunnablePassthrough.assign(query=generate_query).assign(
            result=itemgetter("query") | execute_query
        )
        | rephrase_answer
    )

    chain.invoke({"question": "How many customers with credit limit more than 50000"})

The chain returns:

    There are 85 customers with a credit limit greater than 50000.

By ensuring that the examples used for guidance are always contextually relevant, the model can generate more precise SQL queries, reducing errors, improving user satisfaction, and making data insights more accessible to everyone.

In the following section, we will explore the integration of dynamic relevant table selection, further advancing our NL2SQL model's capabilities to efficiently parse and respond to user queries.

Dynamic Relevant Table Selection

Dynamic relevant table selection matters most when an NL2SQL model works against complex databases with 100+ tables. As databases grow in complexity and size, it becomes impractical and costly in terms of prompt token usage to include the schema of every table in the initial prompt for generating SQL queries. The sheer volume of information would overwhelm the model, leading to slower response times and increased computational costs. Dynamic relevant table selection addresses this challenge by focusing the model's attention only on the tables pertinent to the user's query.

    from operator import itemgetter
    from typing import List

    import pandas as pd
    from langchain.chains.openai_tools import create_extraction_chain_pydantic
    from langchain_core.pydantic_v1 import BaseModel, Field

    def get_table_details():
        # Read the CSV file of table names and descriptions into a DataFrame
        table_description = pd.read_csv("database_table_descriptions.csv")

        # Concatenate each table's name and description into one string
        table_details = ""
        for index, row in table_description.iterrows():
            table_details = table_details + "Table Name:" + row['Table'] + "\n" + "Table Description:" + row['Description'] + "\n\n"

        return table_details

    class Table(BaseModel):
        """Table in SQL database."""

        name: str = Field(description="Name of table in SQL database.")

    # table_names = "\n".join(db.get_usable_table_names())
    table_details = get_table_details()
    print(table_details)

This prints the table names with their descriptions:

    Table Name:productlines
    Table Description:Stores information about the differ....

    Table Name:products
    Table Description:Contains de....

Leveraging Smaller, Focused Prompts for Faster Execution

Dynamic relevant table selection hinges on the principle that "less is more." Reducing the scope of information the model needs to consider for each query brings three benefits:

Improved Model Performance: Smaller prompts mean the model has fewer tokens to process, which translates to faster execution times. This is particularly crucial for interactive applications where response time is a key component of user satisfaction.

Enhanced Accuracy: Focusing on only the relevant tables minimizes the risk of generating incorrect SQL queries. This specificity ensures that the model's computational resources are dedicated to understanding and processing only the most pertinent data.

Cost-Efficiency: Reducing the amount of prompt information also means lower token usage costs. In the context of cloud-based NLP services, where processing costs can accumulate rapidly, this efficiency is not only a technical but also a financial advantage.
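
The size difference is easy to see with a small sketch. The schemas below are hypothetical and heavily abbreviated, `build_table_info` is an illustrative helper rather than a LangChain function, and character count is used only as a rough proxy for token count.

```python
def build_table_info(schemas, table_names):
    """Join the schema text of only the requested tables."""
    return "\n\n".join(schemas[name] for name in table_names if name in schemas)


# Hypothetical, abbreviated schemas for illustration
schemas = {
    "customers": "CREATE TABLE customers (customerNumber INT, customerName TEXT, creditLimit REAL)",
    "orders": "CREATE TABLE orders (orderNumber INT, customerNumber INT, status TEXT)",
    "payments": "CREATE TABLE payments (customerNumber INT, amount REAL)",
    "products": "CREATE TABLE products (productCode TEXT, productName TEXT, buyPrice REAL)",
}

# Prompt context with every table versus only the tables selected for the question
full = build_table_info(schemas, list(schemas))
focused = build_table_info(schemas, ["customers", "orders"])

print(f"all tables: {len(full)} characters")
print(f"selected tables: {len(focused)} characters")
```

With four small tables the saving is modest, but the same filtering applied to a database with over a hundred wide tables removes most of the schema text from every prompt.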

    table_details_prompt = f"""Return the names of ALL the SQL tables that MIGHT be relevant to the user question. \
    The tables are:

    {table_details}

    Remember to include ALL POTENTIALLY RELEVANT tables, even if you're not sure that they're needed."""

    table_chain = create_extraction_chain_pydantic(Table, llm, system_message=table_details_prompt)
    tables = table_chain.invoke({"input": "give me details of customer and their order count"})
    tables

The extraction chain returns the matching tables:

    [Table(name='customers'), Table(name='orders')]

A small helper converts these objects into plain table names so they can be passed to the query chain:

    def get_tables(tables: List[Table]) -> List[str]:
        tables = [table.name for table in tables]
        return tables

    select_table = {"input": itemgetter("question")} | create_extraction_chain_pydantic(Table, llm, system_message=table_details_prompt) | get_tables
    select_table.invoke({"question": "give me details of customer and their order count"})

This returns:

    ['customers', 'orders']

Finally, add the table selection step in front of the query generation step:

    chain = (
        RunnablePassthrough.assign(table_names_to_use=select_table)
        | RunnablePassthrough.assign(query=generate_query).assign(
            result=itemgetter("query") | execute_query
        )
        | rephrase_answer
    )

    chain.invoke({"question": "How many customers with order count more than 5"})

Enhancing Chatbots with Memory for Follow-up Database Queries

One of the most advanced steps in creating a user-friendly NL2SQL interface is endowing your chatbot with memory. This feature enables the chatbot to handle follow-up questions related to the database intelligently, providing users with a seamless conversational experience. Let's explore how adding memory to your chatbot can revolutionize interactions with your database.

The Significance of Memory in Chatbots

In real-world conversations, context matters. A question might relate to or build upon previous interactions. Similarly, when users interact with a database through a chatbot, their follow-up questions often depend on the context established by earlier queries and responses. A chatbot equipped with memory can retain this context, allowing it to generate more accurate and relevant SQL queries for follow-up questions.

Implementing Memory in Your NL2SQL Model

To equip your NL2SQL model with memory, consider incorporating a chat message history that tracks the conversation's flow. This history should include both the questions posed by the user and the chatbot's responses, enabling the model to reference previous interactions when generating SQL queries for new questions.
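
Conceptually, a message history is just an ordered list of turns that gets spliced into the prompt between the system instructions and the new question. The sketch below shows that idea with the standard library only. `SimpleHistory` and `build_messages` are hypothetical names chosen to mirror the concept, not LangChain classes.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleHistory:
    """Ordered record of (role, text) turns in the conversation."""

    messages: list = field(default_factory=list)

    def add_user_message(self, text):
        self.messages.append(("human", text))

    def add_ai_message(self, text):
        self.messages.append(("ai", text))


def build_messages(system_prompt, history, question):
    """System prompt first, then earlier turns, then the new question."""
    return [("system", system_prompt), *history.messages, ("human", question)]


history = SimpleHistory()
history.add_user_message("How many customers have an order count greater than 5?")
history.add_ai_message("There are 2 customers with an order count of more than 5.")

messages = build_messages("You are a MySQL expert.", history, "Can you list their names?")
for role, text in messages:
    print(f"{role}: {text}")
```

Because the earlier question and answer sit directly before the follow-up, the model can resolve "their" to the customers from the previous turn.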

Setting Up Message History: Implement a mechanism to record each user query and the corresponding chatbot response. This can be achieved by defining a ChatMessageHistory object that stores this information and can be accessed when needed.

    from langchain.memory import ChatMessageHistory

    history = ChatMessageHistory()

Leveraging Previous Interactions: Integrate this message history into your prompt generation process. Before generating a new SQL query, the model should consider the recorded history to understand the conversation's context.

    final_prompt = ChatPromptTemplate.from_messages(
        [
            ("system", "You are a MySQL expert. Given an input question, create a syntactically correct MySQL query to run. Unless otherwise specified.\n\nHere is the relevant table info: {table_info}\n\nBelow are a number of examples of questions and their corresponding SQL queries. Those examples are just for reference and should be considered while answering follow up questions"),
            few_shot_prompt,
            # Earlier turns of the conversation are inserted here
            MessagesPlaceholder(variable_name="messages"),
            ("human", "{input}"),
        ]
    )

    print(final_prompt.format(input="How many products are there?",table_info="some table info",messages=[]))

Dynamic Prompt Adaptation: Use the chat message history to dynamically adapt the prompts sent to the model for generating SQL queries. This adaptation should include information from previous queries and responses, guiding the model in understanding the context of the follow-up question.

    generate_query = create_sql_query_chain(llm, db,final_prompt)

    chain = (
        RunnablePassthrough.assign(table_names_to_use=select_table)
        | RunnablePassthrough.assign(query=generate_query).assign(
            result=itemgetter("query") | execute_query
        )
        | rephrase_answer
    )

Example Scenario: Handling Follow-Up Questions

Imagine a user first asks, "How many customers have an order count more than 5?" After receiving the answer, they follow up with, "Can you list their names?" With a memory feature, the chatbot can understand that the second question relates to the subset of customers identified in response to the first question, allowing it to generate an accurate follow-up query without needing the user to re-specify the context.

    question = "How many customers with order count more than 5"
    response = chain.invoke({"question": question,"messages":history.messages})

The chain answers:

    There are 2 customers with an order count of more than 5.

Record both sides of the exchange in the history:

    history.add_user_message(question)
    history.add_ai_message(response)
    history.messages

The history now contains:

    [HumanMessage(content='How many customers with order count more than 5'),
     AIMessage(content='There are 2 customers with an order count of more than 5.')]

The follow-up question can now rely on that context:

    response = chain.invoke({"question": "Can you list their names?","messages":history.messages})
    response

The chain answers:

    The names of the customers with more than 5 orders are Mini Gifts Distributors Ltd. and Euro+ Shopping Channel.

Conclusion

Through this guide, we've journeyed through the process of enhancing NL2SQL models using LangChain, showcasing how to transform natural language queries into precise SQL commands. This exploration not only highlights the power of LangChain in making database queries more accessible but also underscores the broader impact of integrating advanced NLP techniques for intuitive data interaction.

For those interested in delving deeper, a video walkthrough, a comprehensive GitHub notebook, and Streamlit code are available to explore these concepts further. These resources offer visual demonstrations and hands-on examples to help bring these ideas to life in your own projects.

The journey toward more natural and efficient database interactions is ongoing, and with each step, we're making the world of data more accessible to all.

If you're curious about the latest in AI technology, I invite you to visit my project, AI Demos, at aidemos.com. It's a rich resource offering a wide array of video demos showcasing the most advanced AI tools. My goal with AI Demos is to educate and illuminate the diverse possibilities of AI.

For even more in-depth exploration, be sure to visit my YouTube channel at https://www.youtube.com/@aidemos.videos. Here, you'll find a wealth of content that delves into the exciting future of AI and its various applications.